A Berkeley Computer Science lab just uploaded “Approaching Human-Level Forecasting with Language Models” to arXiv (DOI:10.48550/arXiv.2402.18563). My take:

There were three things that helped: Study, Scale, and Search, but the greatest of these is Search.

Halawi et al. replicated earlier results that off-the-shelf LLMs can’t forecast, then showed how to make them better. Quickly:

- A coin toss gets a squared error score of 0.25.

- Off-the-shelf LLMs are nearly that bad.

- The exception is GPT-4, which got 0.208.

- With web search and fine-tuning, the best LLMs got down to 0.179.

- The (human) crowd average was 0.149.
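For readers who have not met the Brier score: on binary questions it is just the mean squared error between the forecast probability and the 0/1 outcome. Here is a minimal sketch of why a coin toss scores 0.25. The function name and the toy outcomes are mine, not the paper's.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    if not forecasts:
        raise ValueError("need at least one forecast")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Toy outcomes, invented for illustration only.
outcomes = [1, 0, 0, 1, 1, 0]

# Always answering 50% costs (0.5)^2 = 0.25 on every question,
# whichever way the question resolves.
coin_toss = [0.5] * len(outcomes)
print(brier_score(coin_toss, outcomes))  # 0.25
```

Lower is better: 0 is a perfect forecaster, and 1 is someone confidently wrong every time.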

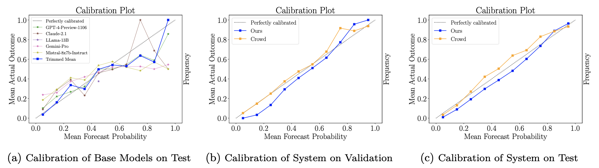

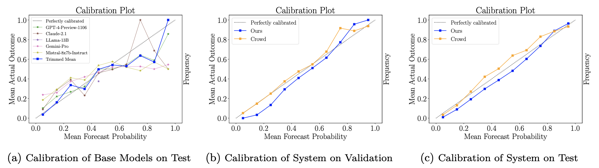

With news search and fine-tuning added, the LLMs were decent (Brier .179) and well-calibrated. Not nearly as good as the crowd (.149), but probably (I’m guessing) better than the median forecaster, since most of crowd accuracy is usually carried by the top few percent. I’m surprised by the calibration.
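"Well-calibrated" means that when the system says 70%, the event happens about 70% of the time. A rough sketch of how one might check that is to bin the forecasts and compare each bin's average forecast with its observed frequency. The function name, the bin count, and the equal-width binning are my assumptions, not the paper's procedure.

```python
def calibration_table(forecasts, outcomes, n_bins=5):
    """Return (mean forecast, observed frequency, count) for each non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for p, o in zip(forecasts, outcomes):
        # Equal-width bins over [0, 1]; a forecast of exactly 1.0 goes in the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, o))

    rows = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            freq = sum(o for _, o in members) / len(members)
            rows.append((mean_p, freq, len(members)))
    return rows


# Toy data, invented for illustration only.
toy_forecasts = [0.1, 0.1, 0.9, 0.9]
toy_outcomes = [0, 0, 1, 1]
for mean_p, freq, n in calibration_table(toy_forecasts, toy_outcomes):
    print(f"forecast ~{mean_p:.2f}  observed {freq:.2f}  (n={n})")
```

A well-calibrated forecaster has the two columns roughly equal in every bin.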

Search

By far the biggest gain was adding Info-Retrieval (Brier .206 -> .186), especially when it found at least 5 relevant articles.

From the paper: “With respect to retrieval, our system nears the performance of the crowd when there are at least 5 relevant articles. We further observe that as the number of articles increases, our Brier score improves and surpasses the crowd’s (Figure 4a). Intuitively, our system relies on high-quality retrieval, and when conditioned on more articles, it performs better.”

Note: they worked to avoid information leakage. The test set only used questions published after the models' cutoff date, and they did sanity checks to ensure the model didn’t already know events after the cutoff date (and did know events before it!). News retrieval used APIs that allowed cutoff dates, so they could simulate more information becoming available during the life of the question. Retrieval dates were sampled based on the advertised question closing date, not the actual resolution date.
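The leakage guard is easier to see in code. This is a toy sketch, assuming articles carry a publication date and that retrieval dates are spread evenly between the question's open date and its advertised close. The `Article` class, both function names, and the even spacing are hypothetical and mine; the paper's actual sampling scheme may differ.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Article:
    title: str
    published: date


def visible_articles(articles, retrieval_date):
    """Keep only articles published on or before the simulated retrieval date."""
    return [a for a in articles if a.published <= retrieval_date]


def retrieval_dates(opened, advertised_close, n):
    """n evenly spaced dates from the open date up to (not including) the advertised close.

    Even spacing is an assumption made for illustration.
    """
    span = (advertised_close - opened).days
    return [opened + timedelta(days=span * i // n) for i in range(n)]


# Toy data, invented for illustration only.
articles = [
    Article("early report", date(2024, 1, 1)),
    Article("late report", date(2024, 2, 1)),
]
print([a.title for a in visible_articles(articles, date(2024, 1, 15))])
print(retrieval_dates(date(2024, 1, 1), date(2024, 1, 11), 5))
```

The point of keying on the advertised close is that the actual resolution date is itself information the forecaster would not have had.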

Study:

Fine-tuning improved on the baseline (.186 -> .179 for the full system, with variants at 0.181-0.183). If I understand correctly, the model was trained on its own forecasts for training-set questions, keeping those that had outperformed the crowd but not by too much, to mitigate overconfidence.

That last adjustment – good but not too good – suggests there are still a lot of judgmental knobs to twiddle, risking a garden of forking paths. However, assuming no actual flaws like information leakage, the paper stands as an existence proof of decent forecasting, though not a representative sample of what replication-of-method would find.
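To make the "good but not too good" knob concrete, here is a sketch of the filter as I understand it: keep a model forecast for fine-tuning only if it beat the crowd on squared error and did not stray too far from the crowd. The field names and the `max_gap` value are placeholders I chose for illustration, not values taken from the paper.

```python
def select_for_finetuning(candidates, max_gap=0.15):
    """Keep forecasts that beat the crowd without departing too far from it.

    Each candidate is a dict with 'model_p', 'crowd_p' (probabilities)
    and 'outcome' (0 or 1). max_gap is an illustrative placeholder.
    """
    kept = []
    for c in candidates:
        model_err = (c["model_p"] - c["outcome"]) ** 2
        crowd_err = (c["crowd_p"] - c["outcome"]) ** 2
        beats_crowd = model_err < crowd_err
        close_to_crowd = abs(c["model_p"] - c["crowd_p"]) <= max_gap
        if beats_crowd and close_to_crowd:
            kept.append(c)
    return kept


# Toy data, invented for illustration only. The question resolved YES in each case.
candidates = [
    {"model_p": 0.70, "crowd_p": 0.60, "outcome": 1},  # better and close: kept
    {"model_p": 0.99, "crowd_p": 0.60, "outcome": 1},  # better but too extreme: dropped
    {"model_p": 0.50, "crowd_p": 0.60, "outcome": 1},  # worse than crowd: dropped
]
print(select_for_finetuning(candidates))
```

Every such threshold is another fork in the garden, which is why I would like to see how sensitive the headline number is to it.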

Scale:

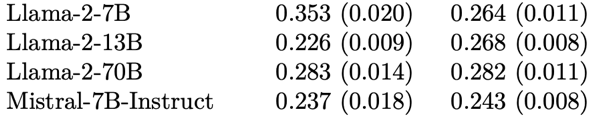

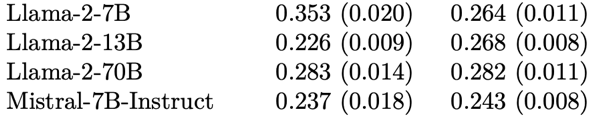

GPT-4 did a little better than GPT-3.5 (.179 vs .182), and a lot better than lunchbox models like Llama-2.

But it’s not just scale, as you can see above: Llama’s 13B model outperforms its 70B by a lot. Maybe sophistication would be a better word, but that’s too many syllables for a slogan.

Thoughts

Calibration: surprisingly good. Some of that probably comes from the careful selection of forecasts to fine-tune from, and some likely from the crowd-within style setup where the model forecast is the trimmed mean of at least 16 different forecasts it generated for the question. [Update: the Schoenegger et al. paper (also this week) ensembled 12 different LLMs and got worse calibration. Fine-tuning has my vote now.]
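A sketch of the crowd-within aggregation: sample several forecasts for the same question, drop the extremes at both ends, and average the rest. This is a standard symmetric trimmed mean. The paper's exact variant may differ, and the trim fraction and sample values below are mine.

```python
def trimmed_mean(values, trim_fraction=0.125):
    """Average after dropping the lowest and highest trim_fraction of values."""
    if not values:
        raise ValueError("need at least one value")
    if not 0 <= trim_fraction < 0.5:
        raise ValueError("trim_fraction must be in [0, 0.5)")
    ordered = sorted(values)
    k = int(len(ordered) * trim_fraction)  # number dropped from each end
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


# Sixteen toy forecasts for one question, invented for illustration only.
samples = [0.05, 0.55, 0.58, 0.60, 0.60, 0.61, 0.62, 0.62,
           0.63, 0.64, 0.65, 0.65, 0.66, 0.68, 0.70, 0.98]

print(f"plain mean:   {sum(samples) / len(samples):.3f}")
print(f"trimmed mean: {trimmed_mean(samples):.3f}")
```

With 16 samples and a trim fraction of 0.125, the two lowest and two highest forecasts are discarded, so a single wild sample like 0.05 or 0.98 cannot drag the aggregate around.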

Forking Paths: They did a hyperparameter search to optimize system configuration, like the choice of aggregation function (trimmed mean), as well as retrieval and prompting strategies. This bothers me less than it might because (1) adding good article retrieval matters more than all the other steps; (2) hyperparameter search can itself be specified and replicated (though I bet the choice of good-but-not-great for training forecasts was ad hoc); and (3) the paper is an existence proof.

Quality: It’s about 20% worse than the crowd forecast (Brier .179 vs .149). I would like to know where it falls in crowd percentile terms. However, it’s different enough from the crowd that mixing them at 4:1 (crowd:model) improved the aggregate.
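Both numbers in that paragraph are simple arithmetic. A small sketch follows, where the `blend` helper and the example probabilities are mine and the Brier scores are the ones quoted above.

```python
def blend(crowd_p, model_p, crowd_weight=4, model_weight=1):
    """Weighted average of two probabilities; the default is 4:1 crowd:model."""
    total = crowd_weight + model_weight
    return (crowd_weight * crowd_p + model_weight * model_p) / total


# How much worse is the system's Brier score than the crowd's, relatively?
system_brier, crowd_brier = 0.179, 0.149
relative_gap = (system_brier - crowd_brier) / crowd_brier
print(f"relative gap: {relative_gap:.1%}")  # roughly 20%

# Toy example: crowd says 60%, model says 80%. At 4:1 the blend moves
# only a fifth of the way from the crowd toward the model.
print(f"blended forecast: {blend(0.60, 0.80):.2f}")
```

The blend helps only to the extent that the model's errors are not the same as the crowd's, which is the interesting part of the result.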

They note the system had its biggest Brier gains when the crowd was guessing near 0.5, but I’m unimpressed: (1) this seems of little practical importance, especially if those questions really are uncertain; (2) it only takes Brier from .24 to .237, nothing to write home about; and (3) it’s too post hoc, drawing the target after taking the shots.

Overall: A surprising amount of forecasting is nowcasting, and this is something LLMs with good search and inference could indeed get good at. At minimum they could do the initial sweep on a question, or set better priors. I would imagine that marrying LLMs with Argument Mapping could improve things even more.

This paper looks like a good start.