Bari Weiss: Why DEI Must End for Good

I’m afraid she’s right. Worth watching or reading in entirety.

| ❝ | LLMs aren't people, but they act a lot more like people than logical machines. |

Linda McIver and Cory Doctorow do not buy the AI hype.

McIver’s ChatGPT is an evolutionary dead end:

As I have noted in the past, these systems are not intelligent. They do not think. They do not understand language. They literally choose a statistically likely next word, using the vast amounts of text they have cheerfully stolen from the internet as their source.

Doctorow’s Autocomplete Worshippers:

AI has all the hallmarks of a classic pump-and-dump, starting with terminology. AI isn’t “artificial” and it’s not “intelligent.” “Machine learning” doesn’t learn. On this week’s Trashfuture podcast, they made an excellent (and profane and hilarious) case that ChatGPT is best understood as a sophisticated form of autocomplete – not our new robot overlord.

Not so fast. First, AI systems do understand text, though not the real-world referents. Although LLMs were trained by choosing the most likely word, they do more. Representations matter. How you choose the most likely word matters. A very large word frequency table could predict the most likely word, but it couldn’t do novel word algebra (king - man + woman = ___) or any of the other things that LLMs do.
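To make the word-algebra point concrete, here is a minimal sketch using made-up two-dimensional vectors. The vocabulary, the axis meanings, and the `analogy` helper are all assumptions for illustration; real models learn far higher-dimensional representations from text, and nothing here reflects how any particular LLM is built.

```python
import math

# Hypothetical toy embeddings (values invented for illustration only).
# Axis 0 loosely stands for "royalty", axis 1 for "gender".
EMBEDDINGS = {
    "king": (1.0, 1.0),
    "queen": (1.0, -1.0),
    "man": (0.0, 1.0),
    "woman": (0.0, -1.0),
    "apple": (-1.0, 0.0),
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = tuple(
        x - y + z for x, y, z in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])
    )
    candidates = [w for w in EMBEDDINGS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))


print(analogy("king", "man", "woman"))
```

A frequency table has no geometry to subtract and add in; the arithmetic only works because words live in a space where directions carry meaning.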

Second, McIver and Doctorow trade on their expertise to make their debunking claim: we understand AI. But that won’t do. As David Mandel notes in a recent preprint, AI Risk is the only existential risk where the experts in the field rate it riskier than informed outsiders.

Google’s Peter Norvig clearly understands AI. And he and colleagues argue that today’s LLMs are already general, if limited:

Artificial General Intelligence (AGI) means many different things to different people, but the most important parts of it have already been achieved by the current generation of advanced AI large language models such as ChatGPT, Bard, LLaMA and Claude. …today’s frontier models perform competently even on novel tasks they were not trained for, crossing a threshold that previous generations of AI and supervised deep learning systems never managed. Decades from now, they will be recognized as the first true examples of AGI, just as the 1945 ENIAC is now recognized as the first true general-purpose electronic computer.

That doesn’t mean he’s right, only that knowing how LLMs work doesn’t automatically dispel claims.

Meta’s Yann LeCun clearly understands AI. He sides with McIver & Doctorow that AI is dumber than cats, and argues there’s a regulatory-capture game going on. (Meta wants more openness, FYI.)

Demands to police AI stemmed from the “superiority complex” of some of the leading tech companies that argued that only they could be trusted to develop AI safely, LeCun said. “I think that’s incredibly arrogant. And I think the exact opposite,” he said in an interview for the FT’s forthcoming Tech Tonic podcast series.

Regulating leading-edge AI models today would be like regulating the jet airline industry in 1925 when such aeroplanes had not even been invented, he said. “The debate on existential risk is very premature until we have a design for a system that can even rival a cat in terms of learning capabilities, which we don’t have at the moment,” he said.

Could a system be dumber than cats and still general?

McIver again:

There is no viable path from this statistical threshing machine to an intelligent system. You cannot refine statistical plausibility into independent thought. You can only refine it into increased plausibility.

I don’t think McIver was trying to spell out the argument in that short post, but as stated this begs the question. Perhaps you can’t get life from dead matter. Perhaps you can. The argument cannot be, “It can’t be intelligent if I understand the parts”.

Doctorow refers to Ted Chiang’s “instant classic”, ChatGPT Is a Blurry JPEG of the Web

[AI] hallucinations are compression artifacts, but—like the incorrect labels generated by the Xerox photocopier—they are plausible enough that identifying them requires comparing them against the originals, which in this case means either the Web or our own knowledge of the world.

I think that does a good job of correcting many mistaken impressions, and correctly deflating things a bit. But also, that “Blurry JPEG” is key to LLMs’ abilities: they are compressing their world, be it images, videos, or text. That is, they are making models of it. As Doctorow notes,

Except in some edge cases, these systems don’t store copies of the images they analyze, nor do they reproduce them.

They gist them. Not necessarily the way humans do, but analogously. Those models let them abstract, reason, and create novelty. Compression doesn’t guarantee intelligence, but it is closely related.

Two main limitations of AI right now:

Why not use a century of experience with cognitive measures (PDF) to help quantify AI abilities and gaps?

~ ~ ~

An interesting tangent: Doctorow’s piece covers copyright. He thinks that

Under these [current market] conditions, giving a creator more copyright is like giving a bullied schoolkid extra lunch money.

…there are loud, insistent calls … that training a machine-learning system is a copyright infringement.

This is a bad theory. First, it’s bad as a matter of copyright law. Fundamentally, machine learning … [is] a math-heavy version of what every creator does: analyze how the works they admire are made, so they can make their own new works.

So any law against this would undo what wins creators have had over conglomerates regarding fair use and derivative works.

Turning every part of the creative process into “IP” hasn’t made creators better off. All that it’s accomplished is to make it harder to create without taking terms from a giant corporation, whose terms inevitably include forcing you to trade all your IP away to them. That’s something that Spider Robinson prophesied in his Hugo-winning 1982 story, “Melancholy Elephants”.

| ❝ | So if you hear that 60% of papers in your field don’t replicate, shouldn't you care a lot about which ones? Why didn't my colleagues and I immediately open up that paper's supplement, click on the 100 links, and check whether any of our most beloved findings died? _~A. Mastroianni_ |

HTT to the well-read Robert Horn for the link.

After replication failures and more recent accounts of fraud, Elizabeth Gilbert & Nick Hobson ask, Is psychology good for anything?

If the entire field of psychology disappeared today, would it matter? …

Adam Mastroianni, a postdoctoral research scholar at Columbia Business School says: meh, not really.

At the time I replied with something like this:

~ ~ ~ ~ ~

There’s truth to this. I think Taleb noted that Shakespeare and Aeschylus are keener observers of the human condition than the average academic. But it’s good to remember there are useful things in psychology, as Gilbert & Hobson note near the end.

I might add the Weber-Fechner law and effects that reveal mental mechanisms, like:

Losing the Weber-Fechner law would be like losing Newton: $F = ma$ reset default motion from stasis to inertia; $p = k \log(S/S_0)$ reset sensation from absolute to relative.
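A quick sketch of what “relative, not absolute” means in the Weber-Fechner formula. The function name, the default constant `k = 1`, the threshold of 1, and the stimulus values are all assumptions chosen for illustration, not measured values.

```python
import math


def perceived(stimulus, threshold=1.0, k=1.0):
    """Weber-Fechner: perceived intensity p = k * log(S / S0).

    `threshold` plays the role of S0; both defaults are illustrative.
    """
    return k * math.log(stimulus / threshold)


# Doubling the stimulus adds the same perceived increment at any baseline.
step_low = perceived(20) - perceived(10)
step_high = perceived(2000) - perceived(1000)

print(step_low, step_high)
```

Both steps come out to $k \log 2$: going from 10 to 20 feels like the same jump as going from 1000 to 2000, even though the absolute change is a hundred times larger.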

7±2 could be 8±3 or 6±1, and there’s chunking. But to lose the idea that short-term memory has but a few fleeting registers would quake the field. And when the default is only ~7, losing or gaining a few is huge.

Stroop, mental rotation, deficits, & brain function are a mix of observation and implied theory. Removing some of this is erasing the moons of Jupiter: stubborn bright spots that rule out theories and strongly suggest alternatives. Stroop is “just” an illusion – but its existence limits independence of processing. Stroop’s cousins in visual search have practical applications from combat & rescue to user-interface design.

Likewise, that brains rotate images constrains mechanism, and informs dyslexic-friendly fonts and interface design.

Neural signals are too slow to track major-league fastballs. But batters can hit them. That puzzle helped uncover some clever signal-processing hacks that help animals perceive moving objects slightly ahead of where they are.

~ ~ ~

But yes, on the whole psychology is observation-rich and theory-poor: cards tiled in a mosaic, not built into houses.

I opened with Mastroianni’s plane crash analogy – if you heard that 60% of your relatives died in a plane crash, pretty soon you’d want to know which ones.

It’s damning that psychology needn’t much care.

| ❝ | Would studies like this be better if they always did all their stats perfectly? Of course. But the real improvement would be not doing this kind of study at all. |

~ Adam Mastroianni, There are no statistics in the kingdom of God

| ❝ | And that’s why mistakes had to be corrected. BASF fully recognized that Ostwald would be annoyed by criticism of his work. But they couldn’t tiptoe around it, because they were trying to make ammonia from water and air. If Ostwald’s work couldn’t help them do that, then they couldn’t get into the fertilizer and explosives business. They couldn’t make bread from air. And they couldn’t pay Ostwald royalties. If the work wasn’t right, it was useless to everyone, including Ostwald. |

A friend sent me 250th Anniversary Boston Tea Party tea for the 16th.

The tea must be authentic: it appears to have been intercepted in transit.

Adam Russell created the DARPA SCORE replication project. Here he reflects on the importance of Intelligible Failure.

[Advanced Research Projects Agencies] need intelligible failure to learn from the bets they take. And that means evaluating risks taken (or not) and understanding—not merely observing—failures achieved, which requires both brains and guts. That brings me back to the hardest problem in making failure intelligible: ourselves. Perhaps the neologism we really need going forward is for intelligible failure itself—to distinguish it, as a virtue, from the kind of failure that we never want to celebrate: the unintelligible failure, immeasurable, born of sloppiness, carelessness, expediency, low standards, or incompetence, with no way to know how or even if it contributed to real progress.

Came across an older Alan Jacobs post:

For those who have been formed largely by the mythical core of human culture, disagreement and alternative points of view may well appear to them not as matters for rational adjudication but as defilement from which they must be cleansed.

It also has a section on The mythical core as lossy compression.

Today I listened to Sam Harris and read Alan Jacobs on Israel & Gaza. Highly recommended.

(With luck “Micropost” will link Jacobs here.)

Huh. Moonlight is redder than sunlight. The “silvery moon” is an illusion. iopscience.iop.org/article/1…

This looks like a good way to appreciate the beautiful bright thing that periodically makes it hard to see nebulas. blog.jatan.space

Sabine Hossenfelder’s Do your own research… but do it right is an excellent guide to critical thinking and a helpful antidote to the meme that no one should “do your own research”.

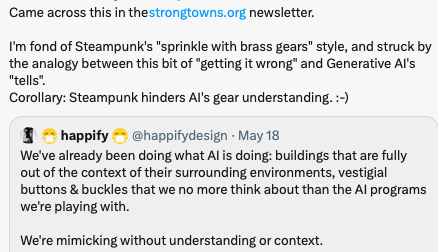

Good essay by Peter Norton, Why we need Strong Towns, critiquing a Current Affairs piece by Allison Dean.

I side with Norton here: Dean falls into the trap of demanding ideological purity. If you have to read only one, pick Norton. But after donning your Norton spectacles, read Dean for a solid discussion of points of overlap, reinterpreting critiques as debate about the best way to reach shared goals.

This other response to Dean attempts to add some middle ground to Dean’s Savannah and Flint examples, hinting at what a reframed critique might look like. Unfortunately it’s long and meanders, and grinds its own axen.

I suspect Dean is allergic to economic justifications like “wealth” and “prosperity”. But we want our communities to thrive, and valuing prosperity is no more yearning for Dickensian hellscapes than loving community is pining for totalitarian ones.

The wonderful, walkable, wish-I-lived-there communities on Not Just Bikes are thriving, apparently in large part by sensible people-oriented design. More of that please.

(And watch Not Just Bikes for a more approachable take on Strong Towns, and examples of success.)

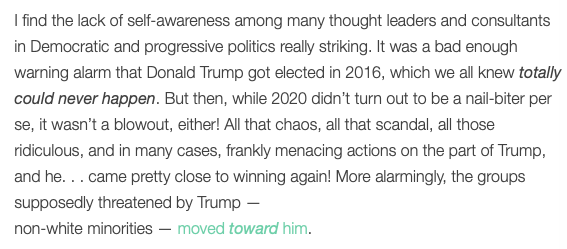

In a recent newsletter, Jesse Singal notes that recent MAGA gains among Democratic constituencies should prompt progressives to pause and question their own political assumptions & theories:

It is quite a string of anomalies. A scientist would be prompted to look for alternate theories.

Singal suggests part of the problem is essentialism:

…activists and others like to talk and write about race in the deeply essentialist and condescending and tokenizing way… It’s everywhere, and it has absolutely exploded during the Trump years.

…both right-wing racists and left-of-center social justice types, [tend] to flatten groups of hundreds of millions of people into borderline useless categories, and to then pretend they share some sort of essence…

The irony.

~~~

Aside: I’m also reminded of a grad school story from Ruth.

Zeno: …Descartes is being an essentialist here…

Ruth: Wait, no, I think you’re being the essentialist….

_____: I’m sorry, what’s an essentialist?

Zeno: [short pause] It is a derogatory term.

(Yes, we had a philosophy teacher named Zeno. I’m not sure if the subject was actually Descartes.)

In The bias we swim in, Linda McIver notes:

Recently I saw a post going around about how ChatGPT assumed that, in the sentence “The paralegal married the attorney because she was pregnant.” “she” had to refer to the paralegal. It went through multiple contortions to justify its assumption…

Her own conversation with ChatGPT was not as bad as the one making the rounds, but still self-contradictory.

Of course it makes the common gender mistake. What amazes me are the contorted justifications. What skin is it off the AI’s nose to say, “Yeah, OK, ‘she’ could be the attorney”? But it’s also read responses and learned that humans get embarrassed and move to self-defense.

~~~~~

If justifying, it could do better. Surely someone must have written how the framing highlights the power dynamic. And I find this reading less plausible:

The underling married his much better-paid boss BECAUSE she was pregnant.

At least, it’s not the same BECAUSE implied in the original.

Reflection for sheltered people like me:

| ❝ |

For my entire life, and a bit more, … virtually all of us [in the US] have been living in a bubble “outside of history.” Hardly anyone you know, including yourself, is prepared to live in actual “moving” history. It will panic many of us, disorient the rest of us, and cause great upheavals in our fortunes, both good and bad. In my view, the good will considerably outweigh the bad (at least from losing #2 [absence of radical technological change], not #1 [American hegemony]), but I do understand that the absolute quantity of the bad disruptions will be high. ~Tyler Cowen, “There is no turning back on AI” Free Press Marginal Rev

|

Laura points out that even in the US, a sizable minority have been living in “actual history”.

Alan Jacobs, The HedgeHog Review, “David Hume’s Guide to Social Media: Emancipation by the cultivation of taste.”

Alan Jacobs reminds us to eschew easy opinions (re: Supreme Court here): blog.ayjay.org/reading-s…

…in which minutes are kept and hours are lost. 😉

AstralCodex on nerds and hipsters:

Revisited my old post about reconstructing Syrotuck’s (lost) Lost Person Data. Mediocre writing, but I still like the basic data detective work we did.

Bookmark: Haidt on Why the mental health of liberal girls sank first and fastest.

Based on the abstract, it seems Alexander Bird’s Understanding the replication crisis as a base rate fallacy has it backwards. Is there reason to dig into the paper?

He notes a core feature of the crisis:

If most of the hypotheses under test are false, then there will be many false hypotheses that are apparently supported by the outcomes of well conducted experiments and null hypothesis significance tests with a type-I error rate (α) of 5%.

Then he says this solves the problem:

Failure to recognize this is to commit the fallacy of ignoring the base rate.

But it merely states the problem: Why most published research findings are false.
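For a feel of the numbers, here is a back-of-envelope sketch of the base-rate arithmetic. Only the 5% type-I error rate comes from the quote; the 10% share of true hypotheses and the 80% power are assumptions I picked for illustration, and the function name is mine.

```python
def share_of_positives_that_are_true(prior_true, power, alpha):
    """Fraction of 'significant' results that reflect a true hypothesis.

    prior_true: share of tested hypotheses that are actually true (assumed)
    power:      chance a true effect reaches significance (assumed)
    alpha:      type-I error rate, i.e. chance a false hypothesis does too
    """
    true_positives = prior_true * power
    false_positives = (1 - prior_true) * alpha
    return true_positives / (true_positives + false_positives)


# Assumed scenario: 1 in 10 hypotheses true, 80% power, alpha = 5%.
ppv = share_of_positives_that_are_true(prior_true=0.10, power=0.80, alpha=0.05)
print(f"{ppv:.0%} of significant findings are true; {1 - ppv:.0%} are false")
```

Under those assumptions, roughly a third of significant findings are false even when every experiment is run well. Lower the prior and the share climbs quickly, which is the problem, not its solution.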

Fascinating discussion of Lord of the Rings:

But whatever it is, it seems to whisper of the sovereignty of mercy above that of legal decree. It shows us a world in which penalties of death are declared, but are then abrogated by the wise and kind.

And on this theme, Laura reminds me of the classic essay, Frodo didn’t fail

I think Jacobs has the right of it where he stakes a claim, but there’s much else in Roberts' pieces. Including this marvelous merger of Eowyn’s speech at Pelennor and the Gettysburg address.

It seemed that Dernhelm laughed, and the clear voice was like the ring of steel. ‘But no living man am I! You look upon a woman. Eowyn I am, Eomund’s daughter. You stand between me and my lord and kin. Begone, if you be not deathless! For living or dark undead, I will smite you, if you touch him. It is for us the living, rather, to be dedicated here to the unfinished work which they who fought here have thus far so nobly advanced. It is rather for us to be here dedicated to the great task remaining before us — that from these honored dead we take increased devotion to that cause for which they gave the last full measure of devotion — that we here highly resolve that these dead shall not have died in vain. That &c. &c.’

Roberts prefers the punchier movie version, “I am no man”. Fair enough! But in musing on that I found a fantastic 2015 essay by Mary Huening, '“I am no man” doesn’t cut it' that lays out precisely what we lose about Eowyn in the movies.