| ❝ | That Western civilisation as we know it owes a very great deal to those, Augustine not least, who generated a vision of work, prayer and belief that overcame the decay and collapse of the Roman Empire. |

| ❝ | The problem with all of us being so much more a hive mind than we realize is that knowledge problems that ripple across our information ecosystems can become not just threats to individuals with weak character or bad habits, but the equivalent of colony collapse. |

~Erin Kissane [Landslide: a ghost story](https://www.wrecka.ge/landslide-a-ghost-story/)

A reflection on Chesterton’s fence from Farnam Street.

The fence:

There exists in such a case a certain institution or law; let us say, for the sake of simplicity, a fence or gate erected across a road. The more modern type of reformer goes gaily up to it and says, “I don’t see the use of this; let us clear it away.” To which the more intelligent type of reformer will do well to answer: “If you don’t see the use of it, I certainly won’t let you clear it away. Go away and think. Then, when you can come back and tell me that you do see the use of it, I may allow you to destroy it.”

Note: read this paper: stronger LLMs exhibit more cognitive bias. If robust, that would be a very promising result, as I noted in my comment to Kris Wheaton here.

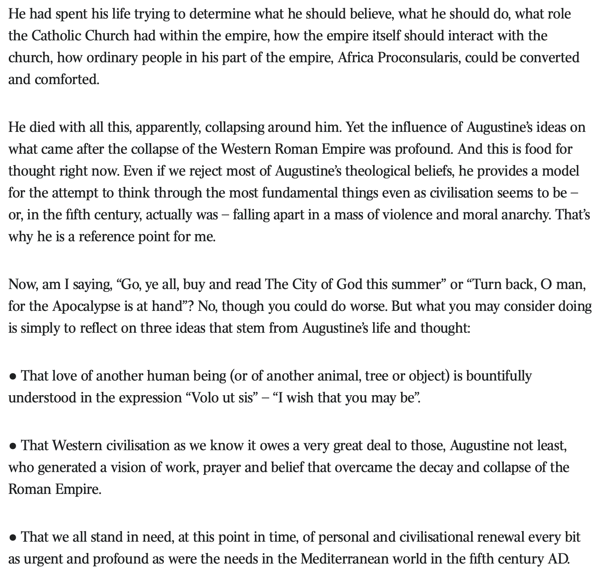

Some perspective from Alan Jacobs:

| ❝ | I don’t believe there’s anything more morally corrupting than an utterly single-minded focus on defeating your political enemies, even when those political enemies really deserve to be defeated. |

…because

| ❝ | only fully human persons, persons formed by wide and generous encounters with the whole of humanity, are able to think and act wisely in the political realm. |

~A. Jacobs, What I’ll Be Doing

See also Breaking Bread With the Dead

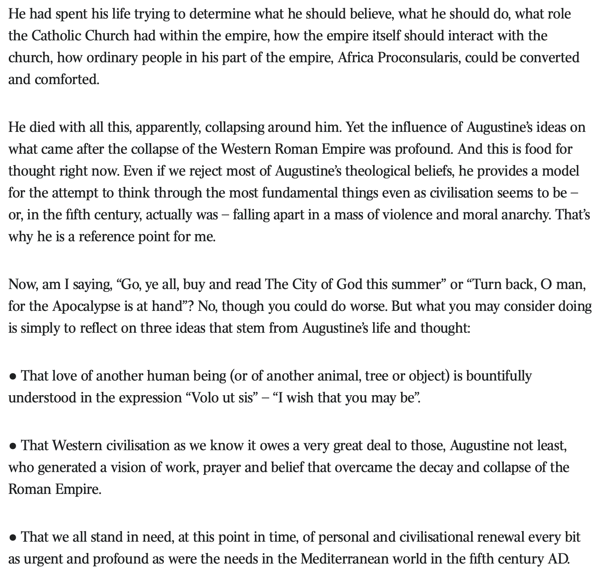

| ❝ | If justice is not based on the facts, if principles of justice are not applied universally, there is no real justice. |

Alice Dreger "Darkness’s Descent on the American Anthropological Association", Hum Nat 2011, pp.243-244.

She expands on this in the excellent Galileo’s Middle Finger📚.

For some reason it’s hard to hold this in mind.

| ❝ | In MAGAworld, declarative statements ... serve as identity markers.... They are not for conveying Facts, Truth, Reality.... Whether ... Democrats have and deploy weather weapons could not be more irrelevant; what matters is that _this is the kind of thing we say about Democrats_ |

Would people who talk about weather weapons agree?

And was “Defund the police” similar?

Daniel Lakens on why we won’t move beyond p<.05. Key: few really offered alternatives.

Part 2 of an excellent 2-part reflection on the APA special issue 5 years ago.

| ❝ | And that’s why mistakes had to be corrected. BASF fully recognized that Ostwald would be annoyed by criticism of his work. But they couldn’t tiptoe around it, because they were trying to make ammonia from water and air. If Ostwald’s work couldn’t help them do that, then they couldn’t get into the fertilizer and explosives business. They couldn’t make bread from air. And they couldn’t pay Ostwald royalties. If the work wasn’t right, it was useless to everyone, including Ostwald. |

Adam Russell created the DARPA SCORE replication project. Here he reflects on the importance of Intelligible Failure.

[Advanced Research Projects Agencies] need intelligible failure to learn from the bets they take. And that means evaluating risks taken (or not) and understanding—not merely observing—failures achieved, which requires both brains and guts. That brings me back to the hardest problem in making failure intelligible: ourselves. Perhaps the neologism we really need going forward is for intelligible failure itself—to distinguish it, as a virtue, from the kind of failure that we never want to celebrate: the unintelligible failure, immeasurable, born of sloppiness, carelessness, expediency, low standards, or incompetence, with no way to know how or even if it contributed to real progress.

Sabine Hossenfelder’s Do your own research… but do it right is an excellent guide to critical thinking and a helpful antidote to the meme that no one should “do your own research”.

Alan Jacobs' Two versions of covid skepticism summarizes a longer piece by Madeleine Kearns. Both are worth reading.

To his quotes I’ll add the core folly Kearns charges both the Covidians and the Skeptics with:

| ❝ | by making problems that are in essence forever with us seem like a unique historical rupture. |

Headlines about the death of theory are philosopher clickbait. Fortunately Laura Spinney’s article is more self-aware than the headline:

| ❝ | But Anderson’s [2008] prediction of the end of theory looks to have been premature – or maybe his thesis was itself an oversimplification. There are several reasons why theory refuses to die, despite the successes of such theory-free prediction engines as Facebook and AlphaFold. All are illuminating, because they force us to ask: what’s the best way to acquire knowledge and where does science go from here? |

(Note: Laura Spinney also wrote Pale Rider, a history of the 1918 flu.)

Forget Facebook for a moment. Image classification is the undisputed success of black-box AI: we don’t know how to write a program to recognize cats, but we can train a neural net on lots of pictures and “automate the ineffable”.

But we’ve had theory-less ways to recognize cat images for millions of years. Heck, we have recorded images of cats from thousands of years ago. Automating the ineffable, in Kozyrkov’s lovely phrase, is unspeakably cool, but it has no bearing on the death of theory. It just lets machines do theory-free what we’ve been doing theory-free already.

The problem with black boxes is supposedly that we don’t understand what they’re doing. Hence DARPA’s “Third Wave” of Explainable AI. Kozyrkov thinks testing is better than explaining - after all we trust humans and they can’t explain what they’re doing.

I’m more with DARPA than Kozyrkov here: explainable is important because it tells us how to anticipate failure. We trust inexplicable humans because we basically understand their failure modes. We’re limited, but not fragile.

But theory doesn’t mean understanding anyway. That cat got out of the bag with quantum mechanics. Ahem.

Apparently the whole of quantum theory follows from startlingly simple assumptions about information. That makes for a fascinating new Argument from Design, with the twist that the universe was designed for non-humans, because humans grasp neither the theory nor the world it describes. Most of us don’t understand quantum. Well, maybe Feynman, though even he suggested he might not really understand.

Though Feynman and others seem happy to be instrumentalist about theory. Maybe derivability is enough. It is a kind of understanding, and we might grant that to quantum.

But then why not grant it to black-box AI? Just because the final thing is a pile of linear algebra rather than a few differential equations?

I think it was Wheeler or Penrose – one of those types anyway – who imagined we met clearly advanced aliens who also seemed to have answered most of our open mathematical questions.

And then imagined our disappointment when we discovered that their highly practical proofs amounted to using fast computers to show they held for all numbers tried so far. However large that bound was, we should be rightly disappointed by their lack of ambition and rigor.

Theory-free is science-free. A colleague (Richard de Rozario) opined that “theory-free science” is a category error. It confuses science with prediction, when science is also the framework where we test predictions, and the error-correction system for generating theories.

Three examples from the article:

Certainly. Since the 1970s when Meehl showed that simple linear regressions could outpredict psychiatrists, clinicians, and other professionals. In later work he showed they could do that even if the parameters were random.

So beating these humans isn’t prediction trumping theory. It’s just showing disciplines with really bad theory.

I admire Tom Griffiths, and any work he does. He’s one of the top cognitive scientists around, and using neural nets to probe the gaps in prospect theory is clever; whether it yields epicycles or breakthroughs, it should advance the field.

He’s right that more data means you can support more epicycles. But the basic insights of Wallace’s MML remain: if the sum of your theory + data is not smaller than the data, you don’t have an explanation.
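To make the MML point concrete, here is a toy sketch of the two-part message idea. Everything in it is my own illustrative assumption, not Wallace's formalism: the data are integers in 0..255, the "theory" is a two-parameter rule `x[i] = (a*i + b) mod 256`, and anything the rule gets wrong is sent as an exception. The theory counts as an explanation only if theory plus exceptions is shorter than the raw data.

```python
import math

SYMBOL_BITS = 8   # assumption: each observation is an integer in 0..255
PARAM_BITS = 16   # assumption: the toy theory has two 8-bit parameters (a, b)


def raw_bits(data):
    """Cost of sending the data with no theory at all."""
    return len(data) * SYMBOL_BITS


def two_part_bits(data, a, b):
    """Cost of sending the theory plus the data given the theory.

    Toy theory: x[i] = (a*i + b) mod 256. Every point the theory gets
    wrong is sent as an exception: its position plus its true value.
    """
    n = len(data)
    misses = sum(1 for i, x in enumerate(data) if (a * i + b) % 256 != x)
    count_bits = math.log2(n + 1)             # how many exceptions follow
    per_miss_bits = math.log2(n) + SYMBOL_BITS  # where it is + what it is
    return PARAM_BITS + count_bits + misses * per_miss_bits


def explains(data, a, b):
    """True only if theory + exceptions is shorter than the raw data."""
    return two_part_bits(data, a, b) < raw_bits(data)


# Hypothetical data sets, 64 points each.
regular = [(3 * i + 5) % 256 for i in range(64)]         # follows the rule
irregular = [(37 * i * i + 11) % 256 for i in range(64)]  # does not

print(raw_bits(regular), two_part_bits(regular, 3, 5), explains(regular, 3, 5))
print(raw_bits(irregular), two_part_bits(irregular, 3, 5), explains(irregular, 3, 5))
```

On the regular data the rule compresses; on the irregular data nearly every point becomes an exception, so the "theory" costs more than just sending the data. That is an epicycle, not an explanation.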

AlphaFold’s jumping-off point was the ability of human gamers to out-fold traditional models. The gamers intuitively discovered patterns – though they couldn’t fully articulate them. So this was just another case of automating the ineffable.

But the deep nets that do this are still fragile – they fail in surprising ways that humans don’t, and they are subject to bizarre hacks, because their ineffable theory just isn’t strong enough. Not yet anyway.

So we see that while half of the success of deep nets is Moore’s law and Thank God for Gamers, the other half is tricks to regularize the model.

That is, to reduce its flexibility.

I daresay, to push it towards theory.

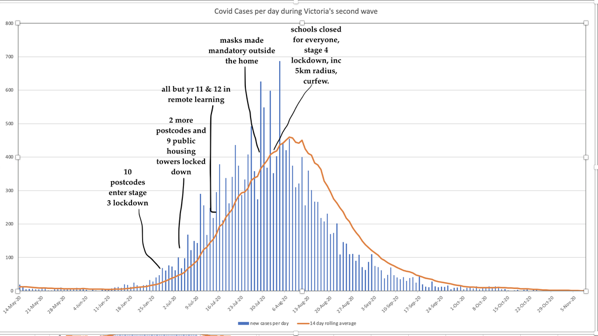

Watching Sydney’s Delta cases repeat the early-phase exponential growth of Melbourne, ADSEI’s Linda McIver asks:

Would our collective understanding of covid have been different if we were all more data literate?

Almost certainly, and I’m all for it. But would that avoid

watching Sydney try all of the “can we avoid really seriously locking down” strategies that we know failed us, … like a cinema audience shouting at the screen,

Not necessarily. Probably not, even, but that’s OK. It would still be a huge step forward to acknowledge the data and decide based on costs, values, and uncertainties. I’m fine with Sydney hypothetically saying,

| ❝ | You're right, it's likely exponential, but we can't justify full lockdown until we hit Melbourne's peak. |

I might be more (or less) cautious. I might care more (or less) about the various tradeoffs. I might make a better (or worse) decision were I in charge. That’s okay. Even with perfect information, values differ.

It’s even fine to be skeptical of data that doesn’t fit my preferred theory. Sometimes Einstein’s right and the data is wrong.

What’s not okay is denying or ignoring the data just because I don’t like the cost of the implied action. Or, funding decades-long FUD campaigns for the same reason.

PS: Here is Linda’s shout suggesting that (only) stage-4 lockdown suppressed Delta:

Arnold Kling: 2021 book titles show epistemological crisis.

This may fit with a historical pattern. The barbarians sack the city, and the carriers of the dying culture repair to their basements to write.

Though some of this goes back at least to Paul Meehl.

HTT: Bob Horn. Again.

In a long post on sustained irrationality in the markets, Vitalik Buterin describes his experience wading into the 2020 election prediction markets:

I decided to make an experiment on the blockchain that I helped to create: I bought $2,000 worth of NTRUMP (tokens that pay $1 if Trump loses) on Augur. Little did I know then that my position would eventually increase to $308,249, earning me a profit of over $56,803, and that I would make all of these remaining bets, against willing counterparties, after Trump had already lost the election. What would transpire over the next two months would prove to be a fascinating case study in social psychology, expertise, arbitrage, and the limits of market efficiency, with important ramifications to anyone who is deeply interested in the possibilities of economic institution design.

There’s a skippable technical section. His take-home is that intellectual underconfidence is a big part of why these markets can stay so wrong for so long.

But nevertheless it seems to me more true than ever that, as goes the famous Yeats quote, “the best lack all conviction, while the worst are full of passionate intensity.”

Thanks to Mike Bishop for alerting me to Jimenez’s 100-tweet thread and Lancet paper on the case for COVID-19 aerosols, and the fascinating 100-year history that still shapes debate.

Because of that history, it seems admitting “airborne” or “aerosol” has been quite a sea change. Some of this is important - “droplets” are supposed to drop, while aerosols remain airborne and so circulate farther.

But some seems definitional - a large enough aerosol is hindered by masks, and a small droplet doesn’t drop.

The point, however, is that, as with measles and other respiratory viruses, “miasma” isn’t a bad concept: contagion can travel, especially indoors.

Please people, if using VAERS, go check the details. @RealJoeSmalley posts stuff like “9 child deaths in nearly 4,000 vaccinations”, but says it’s not his responsibility if the data is wrong: caveat emptor.

With VAERS that’s highly irresponsible - you can’t even use VAERS without reading about its limits.

I get 9 deaths in VAERS if I set the limits to “<18”. But the number of total US vaccinations for <18 isn’t 4,000 - it’s 2.2M.
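A quick sketch of how much the denominator matters. The counts are the ones quoted above; the function name is mine. Note this is a *reporting* rate only: a VAERS report is unverified and says nothing by itself about cause.

```python
def reports_per_100k(reports, vaccinations):
    """Reports per 100,000 vaccinations (a reporting rate, not a causal rate)."""
    return reports * 100_000 / vaccinations


claimed = reports_per_100k(9, 4_000)        # denominator from the tweet
checked = reports_per_100k(9, 2_200_000)    # denominator I found for <18

print(f"claimed: {claimed:.1f} per 100k")
print(f"checked: {checked:.2f} per 100k")
print(f"overstated by a factor of about {claimed / checked:.0f}")
```

Same numerator, but the tweet's denominator inflates the apparent rate by a factor of about 550.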

Also I checked the 9 VAERS deaths for <18:

Two are concerning because little/no risk:

Two+ are concerning but seem experimental. AFAIK the vaccines are not approved for breastfeeding, and are only in clinical trial for young children. Don’t try this at home:

Two were very high risk patients. (Why was this even done?):

Two are clearly unrelated:

For evaluating your risk, only the two teens would seem relevant. They might not be vaccine-related, but with otherwise no known risk, it’s a very good candidate cause.

I’m not able to get “saved search” to work, so here’s the non-default Query Criteria:

Group By: VAERS ID

Ben Kuo saved a life using Google Earth and clear thinking.

In biographies and brands, after some clear examples Jacobs notes that many accusations of “factual errors” are really brand defenses:

When they said that Jacobs makes many factual errors, they weren’t even really making a truth claim, they were uttering a spell to ward off the stranger. They were placing me outside their Inner Ring.

~ ~ ~

In vendoring culture, I’m fascinated by the parallels he draws between vendoring code, and what Gene Luen Yang has done by incorporating DC Comics' earliest racist caricature into a new comic:

What Yang has done is moral repair through vendoring code - in this case ... cultural code. And note that Yang has not ... simply pointed to code created and maintained by someone else. ...he could only correct it by making it his own.

Had I read both, I still wouldn’t have made that connection. Spark.

~ ~ ~

MacWright: Frictionless note-taking produces notes, but it doesn’t - for me - produce memory.

HTT Nuño Sempere’s January forecasting newsletter. And be sure to check out his marvelous Metaforecast service!

~ ~ ~

So: Metaculus and Rootclaim give very different probabilities that COVID-19 originated in a lab (see earlier post summarizing Monk):

Metaculus has ~3K forecasts on that question over the last year+, and over 260 comments, most well-informed. They’ve done well in COVID-19 forecasts vs. experts. (And famously one of their top forecasters nailed the pandemic in late January 2020, as Sempere reminds us.)

Rootclaim, as far as I can tell, begins with some crowdsourcing to formulate hypotheses, get initial probabilities, gather sources, and maybe to help set likelihoods. Then they do a Bayesian update. At one point they used full Bayesian networks. It seems this one treats each evidence-group as independent.
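For readers who want to see what "treats each evidence-group as independent" amounts to, here is a minimal sketch of that kind of update. It is not Rootclaim's actual model: the hypothesis names, priors, and likelihoods below are made-up placeholders, and the function is my own illustration. Under independence, each evidence group simply multiplies each hypothesis by its likelihood, and you normalize at the end.

```python
def bayes_update(priors, evidence_groups):
    """Posterior over hypotheses, assuming evidence groups are independent.

    priors: dict mapping hypothesis -> prior probability.
    evidence_groups: list of dicts mapping hypothesis -> P(evidence | hypothesis).
    """
    unnormalized = dict(priors)
    for likelihoods in evidence_groups:
        for hypothesis in unnormalized:
            unnormalized[hypothesis] *= likelihoods[hypothesis]
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


# Placeholder numbers, purely for illustration.
priors = {"hypothesis_a": 0.5, "hypothesis_b": 0.5}
evidence_groups = [
    {"hypothesis_a": 0.8, "hypothesis_b": 0.2},  # favors A four to one
    {"hypothesis_a": 0.5, "hypothesis_b": 0.5},  # uninformative
]

posterior = bayes_update(priors, evidence_groups)
print(posterior)
```

The catch with the independence assumption is that correlated evidence gets counted more than once, which can push the posterior toward the extremes. That could be one source of divergence from a crowd forecast, though I haven't checked.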

Both are heavily rationalist and Bayesian-friendly, and had access to each other’s forecasts. So the divergence is quite interesting - I wish I had time to dig into it some more.

Worth reading in entirety, Alan Jacobs reflects on an essay by Douthat:

Such a system, predictably, was terrible at generating the kind of outward-facing, evangelistic conservatives who had made the Reagan revolution possible.

He ends by saying Antonin Scalia was the sole survivor of the Old Republic, making Amy Coney Barrett the Last Jedi.

(OK, Douthat doesn’t use Star Wars. But Sunstein did. I just blended them.)

Where Douthat muses on Trump’s loss, Ian Leslie reflected on Biden’s victory over both Sanders and Trump:

It's worth spending a bit of time on what it means to be moderate. Three forgotten principles of moderate politics that sparkle because they are both obvious and ignored.

Turns out Leslie has a new book, Conflicted:

Disagreement is the best way of thinking we have. It weeds out weak arguments, improves decision-making, leads to new ideas, and, counter-intuitively, brings us closer to one another. But only if we do it well - and right now, we’re doing it terribly.

Looking forward to it. Mercier & Sperber demonstrated that disagreement is how groups think, and that under the right conditions, they vastly outthink individuals.

For some gentle advice on How to Think, try Alan Jacobs' book by that name. We all cover the philosophy & cognitive science. Jacobs tackles the human component:

[We describe] argument as war ... because in many arguments there truly is something to be lost, and most often what's under threat is social affiliation. [My bold.]

What to do?

6. Gravitate, as best you can, in every way you can, toward people who seem to value genuine community and can handle disagreement with equanimity.

Gelman’s recent short post on Relevance of Bad Science for Good Science includes a handy Top10 junk list:

A Ted talkin’ sleep researcher misrepresenting the literature or just plain making things up; a controversial sociologist drawing sexist conclusions from surveys of N=3000 where N=300,000 would be needed; a disgraced primatologist who wouldn’t share his data; a celebrity researcher in eating behavior who published purportedly empirical papers corresponding to no possible empirical data; an Excel error that may have influenced national economic policy; an iffy study that claimed to find that North Korea was more democratic than North Carolina; a claim, unsupported by data, that subliminal smiley faces could massively shift attitudes on immigration; various noise-shuffling statistical methods that just won’t go away—all of these, and more, represent different extremes of junk science.

And the following sobering reminder why we study failures:

None of us do all these things, and many of us try to do none of these things—but I think that most of us do some of these things much of the time.

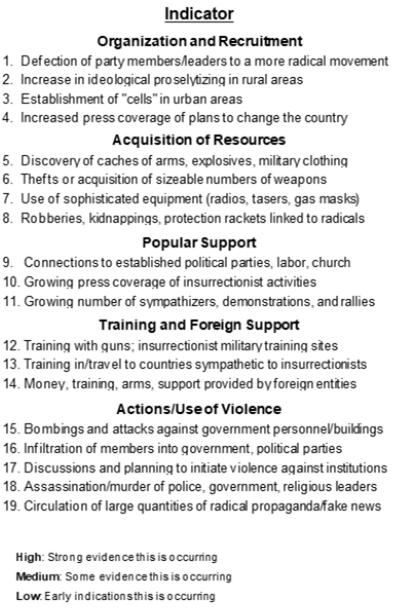

January 6 moved Randy Pherson at Globalytica to ask if there is an active insurrectionist movement in the US.

Before clicking, at least quickly decide whether you would rate these as High, Medium, or Low (image from his post):

Then click to see ratings from a dozen of Pherson’s colleagues - probably professional or retired analysts.