Note: read this paper: stronger LLMs exhibit more cognitive bias. If robust, that would be a very promising result, as I noted in my comment to Kris Wheaton here.

Good grief! They’re still there! Kind-of.

The notebook figures don’t seem to load, nor any details from Gorman’s map. Still, that was more than I expected to find for a web project from 1993, with updates made from grad school into 1995.

This work was from the wonderful undergrad research lab I joined in my junior year. Profs. Bernie Carlson & Mike Gorman were mapping Bell & Edison’s different paths to inventing the telephone.

And wow, some of it’s still there, sporting 1995 “Netscape-specific” web technologies!

Of course a better site now is the Library of Congress

In case I’m ever blessed with teaching again, “The Truth Box” seems a great follow-up to “New Eleusis”, an exercise I inherited from my old undergrad advisor:

They could shake the box, smell the box, remove the sticks, whatever; the only thing they could not do was to open the box or do anything that would cause the box contents to be visually revealed. Their job was to tell me, after eight minutes, what they thought was in the box, and why. I explained that developing good reasoning was very important.

…

The next step was to ask students what would increase their level of certainty about what was in the box. Soon, organically, they were reinventing modern science.

~Alice Dreger, The Truth Box Experiment

I had a few undergrad advisors, but I borrowed “New Eleusis” from Mike Gorman of Simulating Science, and re-inventing the telephone from him and Bernie Carlson. I’ve fond memories of the year or two in their “Repo Lab” at U.Va. helping map Bell & Edison’s notebooks, and making them available on this brand new web thing.

Ugh.

Research-integrity analysts are warning that ‘journal snatchers’ — companies that acquire scholarly journals from reputable publishers — are turning legitimate titles into predatory, low-quality publications with questionable practices.

~Dalmeet Singh Chawla, Invasion of the ‘journal snatchers’, Nature News 17-APR.

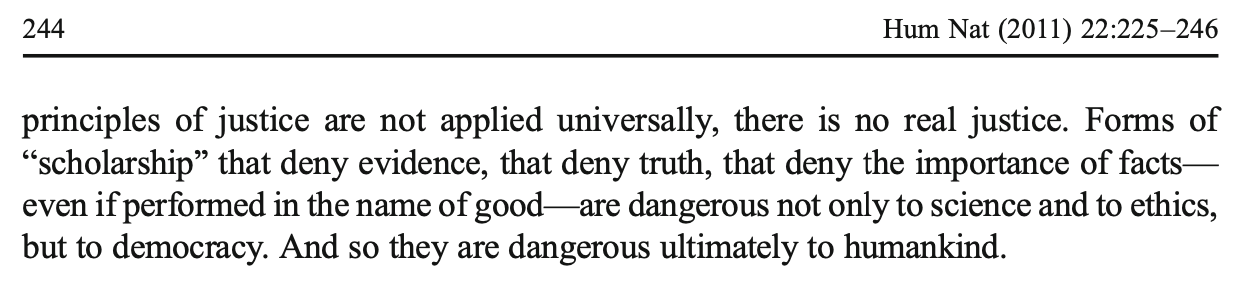

| ❝ | If justice is not based on the facts, if principles of justice are not applied universally, there is no real justice. |

Alice Dreger "Darkness’s Descent on the American Anthropological Association", Hum Nat 2011, pp.243-244.

She expands on this in the excellent Galileo’s Middle Finger📚.

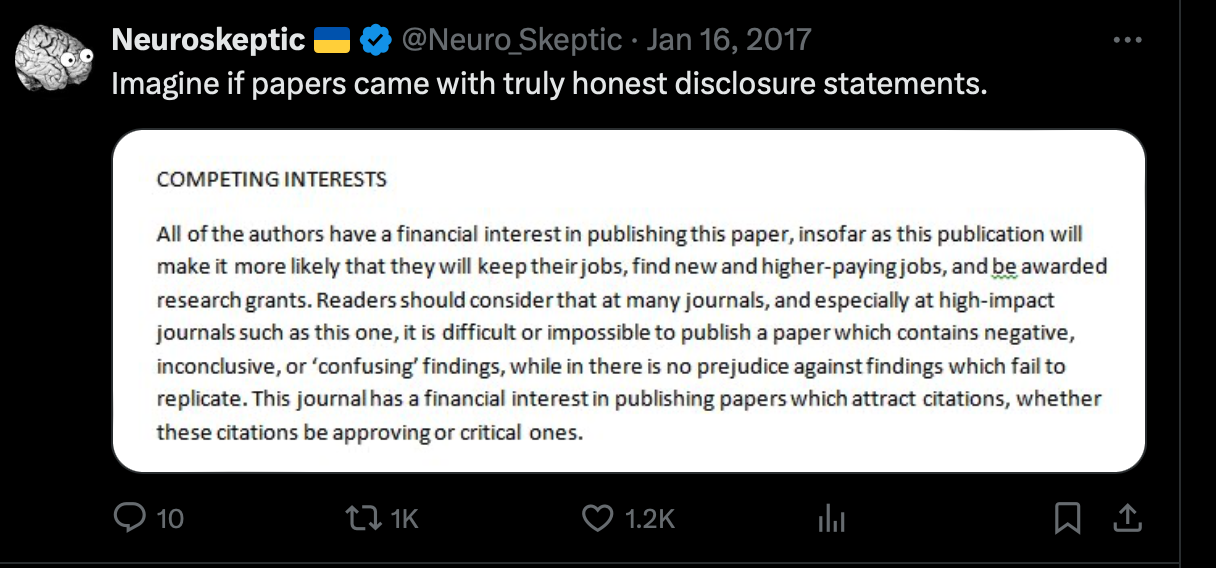

Disclosure:

Interesting abstract from Herzenstein et al suggests that replicable studies are transparent and confident relative to non-replicable ones.

Alas for itself, the abstract is timid. They “allude to the possibility that”.

Even if true, likely temporarily so. But I’ve requested the full text.

Daniel Lakens on why we won’t move beyond p<.05. Key: few really offered alternatives.

Part 2 of an excellent 2-part reflection on the APA special issue 5 years ago.

This is promising: you can run LLM inference and training on 13W of power. I’ve yet to read the research paper, but they found you don’t need matrix multiplication if you adopt ternary [-1, 0, 1] values.
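A toy sketch of why ternary weights make the multiplications disappear. This is only an illustration of the arithmetic, not the paper's implementation; the function name `ternary_matvec` and the example numbers are made up for the purpose.

```python
def ternary_matvec(weights, x):
    """Compute weights @ x when every weight is -1, 0, or +1.

    Each output element is built from additions and subtractions only:
    +1 adds the input, -1 subtracts it, 0 skips it.
    """
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi
            elif w == -1:
                acc -= xi
            elif w != 0:
                raise ValueError("weights must be -1, 0, or +1")
        out.append(acc)
    return out


# Hypothetical 2x3 ternary weight matrix and input vector.
weights = [[1, 0, -1],
           [-1, 1, 0]]
x = [2.0, 3.0, 5.0]
result = ternary_matvec(weights, x)  # same answer as an ordinary matrix-vector product
```

The inner loop never multiplies, which is presumably where the power savings would come from on suitable hardware.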

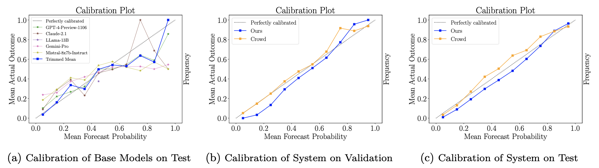

A Berkeley Computer Science lab just uploaded “Approaching Human-Level Forecasting with Language Models” to arXiv DOI:10.48550/arXiv.2402.18563. My take:

There were three things that helped: Study, Scale, and Search, but the greatest of these is Search.

Halawi et al replicated earlier results that off-the-shelf LLMs can’t forecast, then showed how to make them better. Quickly:

Adding news search and fine tuning, the LLMs were decent (Brier .179), and well-calibrated. Not nearly as good as the crowd (.149), but probably (I’m guessing) better than the median forecaster – most of crowd accuracy is usually carried by the top few %. I’m surprised by the calibration.

By far the biggest gain was adding Info-Retrieval (Brier .206 -> .186), especially when it found at least 5 relevant articles.

With respect to retrieval, our system nears the performance of the crowd when there are at least 5 relevant articles. We further observe that as the number of articles increases, our Brier score improves and surpasses the crowd’s (Figure 4a). Intuitively, our system relies on high-quality retrieval, and when conditioned on more articles, it performs better.
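For readers who don't live in Brier scores: for binary questions it is just the mean squared error between the forecast probability and the 0/1 outcome, so lower is better and always saying 50% scores 0.25. A minimal sketch, with made-up forecasts (the function name is mine, not the paper's):

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between probability forecasts and 0/1 outcomes."""
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equal-length, non-empty sequences")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical questions: two resolved yes (1), two resolved no (0).
outcomes = [1, 0, 0, 1]
coin_flip = brier_score([0.5, 0.5, 0.5, 0.5], outcomes)   # uninformed baseline
perfect = brier_score([1.0, 0.0, 0.0, 1.0], outcomes)     # clairvoyant forecaster
```

That 0.25 baseline is the yardstick for the scores quoted here: .179 for the full system and .149 for the crowd.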

Note: they worked to avoid information leakage. The test set only used questions published after the models' cutoff date, and they did sanity checks to ensure the model didn’t already know events after the cutoff date (and did know events before it!). New retrieval used APIs that allowed cutoff dates, so they could simulate more information becoming available during the life of the question. Retrieval dates were sampled based on advertised question closing date, not actual resolution.

Fine-tuning the model improved versus baseline: (.186 -> .179) for the full system, with variants at 0.181-0.183. If I understand correctly, it was trained on model forecasts of training data which had outperformed the crowd but not by too much, to mitigate overconfidence.

That last adjustment – good but not too good – suggests there are still a lot of judgmental knobs to twiddle, risking a garden of forking paths. However, assuming no actual flaws like information leakage, the paper stands as an existence proof of decent forecasting, though not a representative sample of what replication-of-method would find.

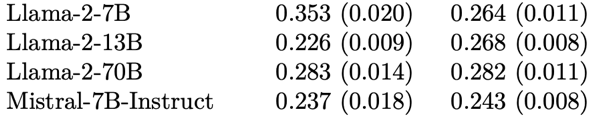

GPT4 did a little better than GPT3.5 (.179 vs .182). And a lot better than lunchbox models like Llama-2.

But it’s not just scale, as you can see below: Llama’s 13B model outperforms its 70B, by a lot. Maybe sophistication would be a better word, but that’s too many syllables for a slogan.

Calibration: was surprisingly good. Some of that probably comes from the careful selection of forecasts to fine-tune from, and some likely from the crowd-within style setup where the model forecast is the trimmed mean from at least 16 different forecasts it generated for the question. [Update: the [Schoenegger et al] paper (also this week) ensembled 12 different LLMs and got worse calibration. Fine tuning has my vote now.]
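A rough sketch of the crowd-within aggregation, assuming a symmetric trimmed mean. The trim fraction and the sample forecasts below are hypothetical; the paper's exact trimming rule may differ.

```python
def trimmed_mean(values, trim_fraction=0.1):
    """Average after dropping the lowest and highest trim_fraction of values."""
    if not values:
        raise ValueError("need at least one value")
    if not 0 <= trim_fraction < 0.5:
        raise ValueError("trim_fraction must be in [0, 0.5)")
    ordered = sorted(values)
    k = int(len(ordered) * trim_fraction)  # how many to drop from each end
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


# Hypothetical repeated forecasts from one model on one question.
samples = [0.0, 0.4, 0.5, 0.6, 1.0]
aggregate = trimmed_mean(samples, trim_fraction=0.2)  # drops 0.0 and 1.0
```

Dropping the extremes keeps a single wild sample from dragging the forecast toward 0 or 1, which plausibly helps calibration.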

Forking Paths: They did a hyperparameter search to optimize system configuration like the choice of aggregation function (trimmed mean), as well as retrieval and prompting strategies. This bothers me less than it might because (1) adding good article retrieval matters more than all the other steps; (2) hyperparameter search can itself be specified and replicated (though I bet the choice of good-but-not-great for training forecasts was ad hoc), and (3) the paper is an existence proof.

Quality: It’s about 20% worse than the crowd forecast (Brier .179 vs .149). I would like to know where it falls in crowd %ile. However, it’s different enough from the crowd that mixing them at 4:1 (crowd:model) improved the aggregate.
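One plausible reading of the 4:1 mix is a weighted average of the two probabilities. That reading is an assumption, as are the function name and the example numbers:

```python
def linear_pool(crowd_p, model_p, crowd_weight=4.0, model_weight=1.0):
    """Weighted average of two probability forecasts (assumed form of the 4:1 mix)."""
    total = crowd_weight + model_weight
    return (crowd_weight * crowd_p + model_weight * model_p) / total


# Hypothetical question: crowd says 70%, model says 50%.
mixed = linear_pool(0.70, 0.50)  # lands much closer to the crowd
```

A mix like this can only beat the crowd if the model's errors differ from the crowd's, which is the point of the observation above.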

They note the system had biggest Brier gains when the crowd was guessing near 0.5, but I’m unimpressed. (1) this seems of little practical importance, especially if those questions really are uncertain, (2) it’s still only taking Brier of .24 -> .237, nothing to write home about, and (3) it’s too post-hoc, drawing the target after taking the shots.

Overall: A surprising amount of forecasting is nowcasting, and this is something LLMs with good search and inference could indeed get good at. At minimum they could do the initial sweep on a question, or set better priors. I would imagine that marrying LLMs with Argument Mapping could improve things even more.

This paper looks like a good start.

| ❝ | LLMs aren't people, but they act a lot more like people than logical machines. |

Linda McIver and Cory Doctorow do not buy the AI hype.

McIver ChatGPT is an evolutionary dead end:

As I have noted in the past, these systems are not intelligent. They do not think. They do not understand language. They literally choose a statistically likely next word, using the vast amounts of text they have cheerfully stolen from the internet as their source.

Doctorow’s Autocomplete Worshippers:

AI has all the hallmarks of a classic pump-and-dump, starting with terminology. AI isn’t “artificial” and it’s not “intelligent.” “Machine learning” doesn’t learn. On this week’s Trashfuture podcast, they made an excellent (and profane and hilarious) case that ChatGPT is best understood as a sophisticated form of autocomplete – not our new robot overlord.

Not so fast. First, AI systems do understand text, though not the real-world referents. Although LLMs were trained by choosing the most likely word, they do more. Representations matter. How you choose the most likely word matters. A very large word frequency table could predict the most likely word, but it couldn’t do novel word algebra (king - man + woman = ___) or any of the other things that LLMs do.
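To make the word-algebra point concrete, here is a toy version with hand-made two-dimensional vectors (roughly "royalty" and "gender"). Real embeddings are learned from text and have hundreds of dimensions; every vector and name below is invented for illustration.

```python
import math

# Hand-made toy vectors: (royalty, gender). Not real embeddings.
TOY_VECTORS = {
    "king": (1.0, 1.0),
    "queen": (1.0, -1.0),
    "man": (0.0, 1.0),
    "woman": (0.0, -1.0),
    "prince": (0.8, 0.9),
    "apple": (-1.0, 0.2),
}


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, vectors):
    """Return the word nearest to a - b + c, excluding the three input words."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = {w: v for w, v in vectors.items() if w not in (a, b, c)}
    return max(candidates, key=lambda w: cosine(target, candidates[w]))


answer = analogy("king", "man", "woman", TOY_VECTORS)
```

A frequency table has no geometry to do this with; the arithmetic only works because the representation places related words in consistent directions.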

Second, McIver and Doctorow trade on their expertise to make their debunking claim: we understand AI. But that won’t do. As David Mandel notes in a recent preprint, AI Risk is the only existential risk where the experts in the field rate it riskier than informed outsiders.

Google’s Peter Norvig clearly understands AI. And he and colleagues argue they’re already general, if limited:

Artificial General Intelligence (AGI) means many different things to different people, but the most important parts of it have already been achieved by the current generation of advanced AI large language models such as ChatGPT, Bard, LLaMA and Claude. …today’s frontier models perform competently even on novel tasks they were not trained for, crossing a threshold that previous generations of AI and supervised deep learning systems never managed. Decades from now, they will be recognized as the first true examples of AGI, just as the 1945 ENIAC is now recognized as the first true general-purpose electronic computer.

That doesn’t mean he’s right, only that knowing how LLMs work doesn’t automatically dispel claims.

Meta’s Yann LeCun clearly understands AI. He sides with McIver & Doctorow that AI is dumber than cats, and argues there’s a regulatory-capture game going on. (Meta wants more openness, FYI.)

Demands to police AI stemmed from the “superiority complex” of some of the leading tech companies that argued that only they could be trusted to develop AI safely, LeCun said. “I think that’s incredibly arrogant. And I think the exact opposite,” he said in an interview for the FT’s forthcoming Tech Tonic podcast series.

Regulating leading-edge AI models today would be like regulating the jet airline industry in 1925 when such aeroplanes had not even been invented, he said. “The debate on existential risk is very premature until we have a design for a system that can even rival a cat in terms of learning capabilities, which we don’t have at the moment,” he said.

Could a system be dumber than cats and still general?

McIver again:

There is no viable path from this statistical threshing machine to an intelligent system. You cannot refine statistical plausibility into independent thought. You can only refine it into increased plausibility.

I don’t think McIver was trying to spell out the argument in that short post, but as stated this begs the question. Perhaps you can’t get life from dead matter. Perhaps you can. The argument cannot be, “It can’t be intelligent if I understand the parts”.

Doctorow refers to Ted Chiang’s “instant classic”, ChatGPT Is a Blurry JPEG of the Web

[AI] hallucinations are compression artifacts, but—like the incorrect labels generated by the Xerox photocopier—they are plausible enough that identifying them requires comparing them against the originals, which in this case means either the Web or our own knowledge of the world.

I think that does a good job at correcting many mistaken impressions, and correctly deflating things a bit. But also, that “Blurry JPEG” is key to LLMs’ abilities: they are compressing their world, be it images, videos, or text. That is, they are making models of it. As Doctorow notes,

Except in some edge cases, these systems don’t store copies of the images they analyze, nor do they reproduce them.

They gist them. Not necessarily the way humans do, but analogously. Those models let them abstract, reason, and create novelty. Compression doesn’t guarantee intelligence, but it is closely related.

Two main limitations of AI right now:

Why not use a century of experience with cognitive measures (PDF) to help quantify AI abilities and gaps?

~ ~ ~

An interesting tangent: Doctorow’s piece covers copyright. He thinks that

Under these [current market] conditions, giving a creator more copyright is like giving a bullied schoolkid extra lunch money.

…there are loud, insistent calls … that training a machine-learning system is a copyright infringement.

This is a bad theory. First, it’s bad as a matter of copyright law. Fundamentally, machine learning … [is] a math-heavy version of what every creator does: analyze how the works they admire are made, so they can make their own new works.

So any law against this would undo what wins creators have had over conglomerates regarding fair use and derivative works.

Turning every part of the creative process into “IP” hasn’t made creators better off. All that it’s accomplished is to make it harder to create without taking terms from a giant corporation, whose terms inevitably include forcing you to trade all your IP away to them. That’s something that Spider Robinson prophesied in his Hugo-winning 1982 story, “Melancholy Elephants”.

| ❝ | So if you hear that 60% of papers in your field don’t replicate, shouldn't you care a lot about which ones? Why didn't my colleagues and I immediately open up that paper's supplement, click on the 100 links, and check whether any of our most beloved findings died? _~A. Mastroianni_ |

HTT to the well-read Robert Horn for the link.

After replication failures and more recent accounts of fraud, Elizabeth Gilbert & Nick Hobson ask, Is psychology good for anything?

If the entire field of psychology disappeared today, would it matter? …

Adam Mastroianni, a postdoctoral research scholar at Columbia Business School says: meh, not really.

At the time I replied with something like this:

~ ~ ~ ~ ~

There’s truth to this. I think Taleb noted that Shakespeare and Aeschylus are keener observers of the human condition than the average academic. But it’s good to remember there are useful things in psychology, as Gilbert & Hobson note near the end.

I might add the Weber-Fechner law and effects that reveal mental mechanisms, like:

Losing the Weber-Fechner would be like losing Newton: $F = m a$ reset default motion from stasis to inertia. $p = k \log(S/S_0)$ reset sensation from absolute to relative.
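A short worked step shows why the law makes sensation relative. With $p$ the perceived intensity, $S$ the stimulus, $S_0$ the threshold stimulus, and $k$ a constant, comparing two stimuli $S_1$ and $S_2$ gives:

$$p_2 - p_1 = k \log\frac{S_2}{S_0} - k \log\frac{S_1}{S_0} = k \log\frac{S_2}{S_1}$$

The threshold $S_0$ cancels, so only the ratio matters: going from 10 to 20 units feels like the same step as going from 100 to 200, namely $k \log 2$. (The particular numbers are just an illustration.)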

7±2 could be 8±3 or 6±1, and there’s chunking. But to lose the idea that short-term memory has but a few fleeting registers would quake the field. And when the default is only ~7, losing or gaining a few is huge.

Stroop, mental rotation, deficits, & brain function are a mix of observation and implied theory. Removing some of this is erasing the moons of Jupiter: stubborn bright spots that rule out theories and strongly suggest alternatives. Stroop is “just” an illusion – but its existence limits independence of processing. Stroop’s cousins in visual search have practical applications from combat & rescue to user-interface design.

Likewise, that brains rotate images constrains mechanism, and informs dyslexic-friendly fonts and interface design.

Neural signals are too slow to track major-league fastballs. But batters can hit them. That helped find some clever signal processing hacks that help animals perceive moving objects slightly ahead of where they are.

~ ~ ~

But yes, on the whole psychology is observation-rich and theory-poor: cards tiled in a mosaic, not built into houses.

I opened with Mastroianni’s plane crash analogy – if you heard that 60% of your relatives died in a plane crash, pretty soon you’d want to know which ones.

It’s damning that psychology needn’t much care.

| ❝ | And that’s why mistakes had to be corrected. BASF fully recognized that Ostwald would be annoyed by criticism of his work. But they couldn’t tiptoe around it, because they were trying to make ammonia from water and air. If Ostwald’s work couldn’t help them do that, then they couldn’t get into the fertilizer and explosives business. They couldn’t make bread from air. And they couldn’t pay Ostwald royalties. If the work wasn’t right, it was useless to everyone, including Ostwald. |

Adam Russell created the DARPA SCORE replication project. Here he reflects on the importance of Intelligible Failure.

[Advanced Research Projects Agencies] need intelligible failure to learn from the bets they take. And that means evaluating risks taken (or not) and understanding—not merely observing—failures achieved, which requires both brains and guts. That brings me back to the hardest problem in making failure intelligible: ourselves. Perhaps the neologism we really need going forward is for intelligible failure itself—to distinguish it, as a virtue, from the kind of failure that we never want to celebrate: the unintelligible failure, immeasurable, born of sloppiness, carelessness, expediency, low standards, or incompetence, with no way to know how or even if it contributed to real progress.

Sabine Hossenfelder’s Do your own research… but do it right is an excellent guide to critical thinking and a helpful antidote to the meme that no one should “do your own research”.

Based on the abstract, it seems Alexander Bird’s Understanding the replication crisis as a base rate fallacy has it backwards. Is there reason to dig into the paper?

He notes a core feature of the crisis:

If most of the hypotheses under test are false, then there will be many false hypotheses that are apparently supported by the outcomes of well conducted experiments and null hypothesis significance tests with a type-I error rate (α) of 5%.

Then he says this solves the problem:

Failure to recognize this is to commit the fallacy of ignoring the base rate.

But it merely states the problem: Why most published research findings are false.
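The base-rate arithmetic behind that title is short enough to sketch. The 5% type-I error rate comes from the quote; the prior fractions and the 80% power are hypothetical inputs, and this ignores bias and p-hacking entirely.

```python
def positive_predictive_value(prior_true, power, alpha=0.05):
    """Fraction of significant results that reflect true hypotheses.

    prior_true: share of tested hypotheses that are actually true (assumed)
    power:      chance a true effect reaches significance (assumed)
    alpha:      type-I error rate, i.e. chance a false hypothesis does
    """
    true_positives = prior_true * power
    false_positives = (1 - prior_true) * alpha
    return true_positives / (true_positives + false_positives)


one_in_ten = positive_predictive_value(prior_true=0.10, power=0.80)
one_in_hundred = positive_predictive_value(prior_true=0.01, power=0.80)
```

With one true hypothesis in ten, about 64% of significant findings are real; at one in a hundred, only about 14% are, even with well-conducted tests. Naming the fallacy does not change those numbers.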

To fix peer review, break it into stages, a short Nature opinion by Olavo Amaral.

Well that’s cool. Gravitational lensing.

HTT the Northern Virginia Astronomy Club mail list. 🌌

Alan Jacobs' Two versions of covid skepticism summarizes a longer piece by Madeleine Kearns. Both are worth reading.

To his quotes I’ll add the core folly Kearns charges both the Covidians and the Skeptics with:

| ❝ | by making problems that are in essence forever with us seem like a unique historical rupture. |

Headlines about the death of theory are philosopher clickbait. Fortunately Laura Spinney’s article is more self-aware than the headline:

| ❝ | But Anderson’s [2008] prediction of the end of theory looks to have been premature – or maybe his thesis was itself an oversimplification. There are several reasons why theory refuses to die, despite the successes of such theory-free prediction engines as Facebook and AlphaFold. All are illuminating, because they force us to ask: what’s the best way to acquire knowledge and where does science go from here? |

(Note: Laura Spinney also wrote Pale Rider, a history of the 1918 flu.)

Forget Facebook for a moment. Image classification is the undisputed success of black-box AI: we don’t know how to write a program to recognize cats, but we can train a neural net on lots of pictures and “automate the ineffable”.

But we’ve had theory-less ways to recognize cat images for millions of years. Heck, we have recorded images of cats from thousands of years ago. Automating the ineffable, in Kozyrkov’s lovely phrase, is unspeakably cool, but it has no bearing on the death of theory. It just lets machines do theory-free what we’ve been doing theory-free already.

The problem with black boxes is supposedly that we don’t understand what they’re doing. Hence DARPA’s “Third Wave” of Explainable AI. Kozyrkov thinks testing is better than explaining - after all we trust humans and they can’t explain what they’re doing.

I’m more with DARPA than Kozyrkov here: explainable is important because it tells us how to anticipate failure. We trust inexplicable humans because we basically understand their failure modes. We’re limited, but not fragile.

But theory doesn’t mean understanding anyway. That cat got out of the bag with quantum mechanics. Ahem.

Apparently the whole of quantum theory follows from startlingly simple assumptions about information. That makes for a fascinating new Argument from Design, with the twist that the universe was designed for non-humans, because humans neither grasp the theory nor the world it describes. Most of us don’t understand quantum. Well maybe Feynman, though even he suggested he might not really understand.

Though Feynman and others seem happy to be instrumentalist about theory. Maybe derivability is enough. It is a kind of understanding, and we might grant that to quantum.

But then why not grant it to black-box AI? Just because the final thing is a pile of linear algebra rather than a few differential equations?

I think it was Wheeler or Penrose – one of those types anyway – who imagined we met clearly advanced aliens who also seemed to have answered most of our open mathematical questions.

And then imagined our disappointment when we discovered that their highly practical proofs amounted to using fast computers to show they held for all numbers tried so far. However large that bound was, we should be rightly disappointed by their lack of ambition and rigor.

Theory-free is science-free. A colleague (Richard de Rozario) opined that “theory-free science” is a category error. It confuses science with prediction, when science is also the framework where we test predictions, and the error-correction system for generating theories.

Three examples from the article:

Certainly. That has been clear since the 1970s, when Meehl showed that simple linear regressions could outpredict psychiatrists, clinicians, and other professionals. In later work he showed they could do that even if the parameters were random.

So beating these humans isn’t prediction trumping theory. It’s just showing disciplines with really bad theory.

I admire Tom Griffiths, and any work he does. He’s one of the top cognitive scientists around, and using neural nets to probe the gaps in prospect theory is clever; whether it yields epicycles or breakthroughs it should advance the field.

He’s right that more data means you can support more epicycles. But the basic insight of Wallace’s MML remains: if the sum of your theory + data is not smaller than the data, you don’t have an explanation.
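A minimal sketch of that theory-plus-data test, using coin flips. It uses the common approximation of half a log per parameter for the cost of stating the theory, not Wallace's exact MML formula; the function names and example counts are invented for illustration.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a coin with heads probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def two_part_length_bits(n_flips, n_heads):
    """Approximate bits to state a coin bias, then the flips given that bias."""
    theory_bits = 0.5 * math.log2(n_flips)  # rough cost of one parameter
    data_bits = n_flips * binary_entropy(n_heads / n_flips)
    return theory_bits + data_bits


def explains(n_flips, n_heads):
    """True if theory + data is shorter than the raw data (1 bit per flip)."""
    return two_part_length_bits(n_flips, n_heads) < n_flips


biased = explains(1000, 900)  # a "biased coin" theory compresses these flips
fair = explains(1000, 500)    # nothing to compress: the theory only adds cost
```

Each epicycle adds to the theory cost, so it has to buy back more than it costs in shorter data or it explains nothing.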

AlphaFold’s jumping-off point was the ability of human gamers to out-fold traditional models. The gamers intuitively discovered patterns – though they couldn’t fully articulate them. So this was just another case of automating the ineffable.

But the deep nets that do this are still fragile – they fail in surprising ways that humans don’t, and they are subject to bizarre hacks, because their ineffable theory just isn’t strong enough. Not yet anyway.

So we see that while half of the success of Deep Nets is Moore’s law and Thank God for Gamers, the other half is tricks to regularize the model.

That is, to reduce its flexibility.

I daresay, to push it towards theory.

Deborah Mayo has a new post on Model Testing and p-values vs. posteriors.

I haven’t read Bickel, but now want to. So thanks for the alert.

Fisher is surely right about facetiously adopting extreme priors – a “million to one” prior shouldn’t happen without some equivalent of a million experiences, and a “model check” on the priors makes sense if you want more than a hypothetical.

But surely, his paragraph about “not capable of finding expression in any calculation” is rhetorical fluff amounting to “you have the wrong model”. If priors are plucked from the air, they can be airily dismissed.

On this note I find his switch to parapsychology puzzling (follow her link to pp. 42-44). His Pleiades example shows that their unlikely clustering makes it hard to accept randomness despite the posterior odds still favoring randomness by 30:1. I think he is arguing that the sheer weight of evidence makes us doubt our prior assumption. And rightly so – how confident were we of that million:1 guesstimate anyway?
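The odds form of Bayes' rule makes the Pleiades numbers easier to see. Writing $R$ for randomness and $D$ for the observed clustering, and taking the million:1 prior and 30:1 posterior from the paragraph above, the implied likelihood ratio is a back-calculation rather than a figure quoted here:

$$\underbrace{\frac{P(R \mid D)}{P(\neg R \mid D)}}_{\approx\, 30:1} = \underbrace{\frac{P(R)}{P(\neg R)}}_{10^{6}:1} \times \frac{P(D \mid R)}{P(D \mid \neg R)} \quad\Rightarrow\quad \frac{P(D \mid R)}{P(D \mid \neg R)} \approx \frac{30}{10^{6}} \approx \frac{1}{33{,}000}$$

So the data count roughly 33,000 to 1 against randomness, and the posterior still favors randomness only because the prior was so extreme. Doubt the prior and the conclusion flips.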

But his parapsychology example seems to make the opposite point. Here we (correctly) stick to our prior skepticism, and explain away the surprising results as fabrications, mistakes, etc. Here our prior is informed by many things including a century of failed parapsychology experiments – or more precisely a pattern of promising results falling apart under scrutiny. Like prospects for a new cancer drug, we expect it to let us down.

I like to think that “simple” Bayesian inference over all computable models wouldn’t suffer this problem, because all model classes are included. This being intractable, we typically calculate posteriors inside small convenient model families. If things look very wrong, hopefully we remember we may just have the wrong model. Though probably not before adding some epicycles.

[swapped first two paragraphs; tweaks for clarity]

So this Philosophy Stackexchange answer by bobflux has me thinking, even as I’m about to get my booster shot. It’s long, but well-argued.

Intuition: Antibiotic resistance means you must finish your whole prescription so you kill the whole population, instead of just selecting for resistant ones. There is a similar concern with leaky vaccines.

My short summary, in table form plus three notes:

| | Sterilizing (Measles vax) | Leaky (Covid vax) |
|---|---|---|
| Contagious (e.g. Measles) | Vaccinate lots: balance side-effects & infection. No evolution. | More vax ➛ more resistant. 3 paths: 1) Dengue: ☠️ vaxed; 2) Marek: ☠️ unvaxed; 3) common cold |
| Non-Contagious (e.g. Tetanus) | Get if you want. Little impact on others. | –NA– |

Expanding on those three paths:

Dengue vax caused antibody-dependent enhancement (ADE), where the vaccine increased viral load, making Dengue more deadly to the vaxxed. Ouch.

Marek vax in chickens extends infections of “strains otherwise too lethal to persist”. What used to paralyze and kill old birds is now 100% lethal even to young. All must be vaxxed, and will be carriers.

Common cold: Those with mild case go out and about, spreading mild variants and immunities. Those very sick stay home and spread less. Utilitarians have a moral obligation to host Covid Parties.

~End Summary~

I’m happily on the annual-flu-shot train, esp. on the hope that by the time I’m 70 and need it, my system will have seen a lot of variants. Also, I haven’t heard any worries about breeding more resistant flu. I’ve been assuming for awhile that COVID would follow the common-cold path of becoming prevalent and mild, at worst flu-like with annual vaccines. And given that it’s impossible to avoid exposure, it seems better to get side effects of the spike protein rather than infection effects of the live virus.

Now twice in the last week I’ve come across the 2015 paper about the Marek experiment and am thinking about the evolutionary dynamics, and monocultures. How to tell what path we are on? When is it better to Stoically accept the current infections to spare future generations?

More immediately, should I go through with the booster shot? (Given it’s scheduled for tomorrow, that’s the default and most likely outcome. But I am wondering. )

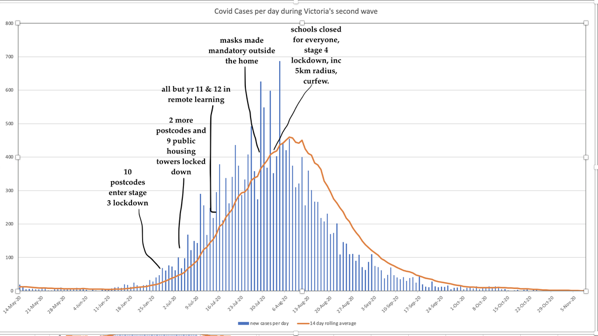

Watching Sydney’s Delta cases repeat the early-phase exponential growth of Melbourne, ADSEI’s Linda McIver asks:

Would our collective understanding of covid have been different if we were all more data literate?

Almost certainly, and I’m all for it. But would that avoid

watching Sydney try all of the “can we avoid really seriously locking down” strategies that we know failed us, … like a cinema audience shouting at the screen,

Not necessarily. Probably not, even, but that’s OK. It would still be a huge step forward to acknowledge the data and decide based on costs, values, and uncertainties. I’m fine with Sydney hypothetically saying,

| ❝ | You're right, it's likely exponential, but we can't justify full lockdown until we hit Melbourne's peak. |

I might be more (or less) cautious. I might care more (or less) about the various tradeoffs. I might make a better (or worse) decision were I in charge. That’s Okay. Even with perfect information, values differ.

It’s even fine to be skeptical of data that doesn’t fit my preferred theory. Sometimes Einstein’s right and the data is wrong.

What’s not okay is denying or ignoring the data just because I don’t like the cost of the implied action. Or, funding decades-long FUD campaigns for the same reason.

PS: Here is Linda’s shout suggesting that (only) stage-4 lockdown suppressed Delta: