I turned to µ.blog this morning because my inbox told me a former colleague had died. I hadn’t stayed in touch, but I really liked Ed. I found myself crying and, of all things, Googling for him. To catch sight of his departing soul? His ResearchGate page will never notice. To remind me what I’ve lost? To touch perhaps, however ephemerally, something we had shared. A project. A conference. A trip.

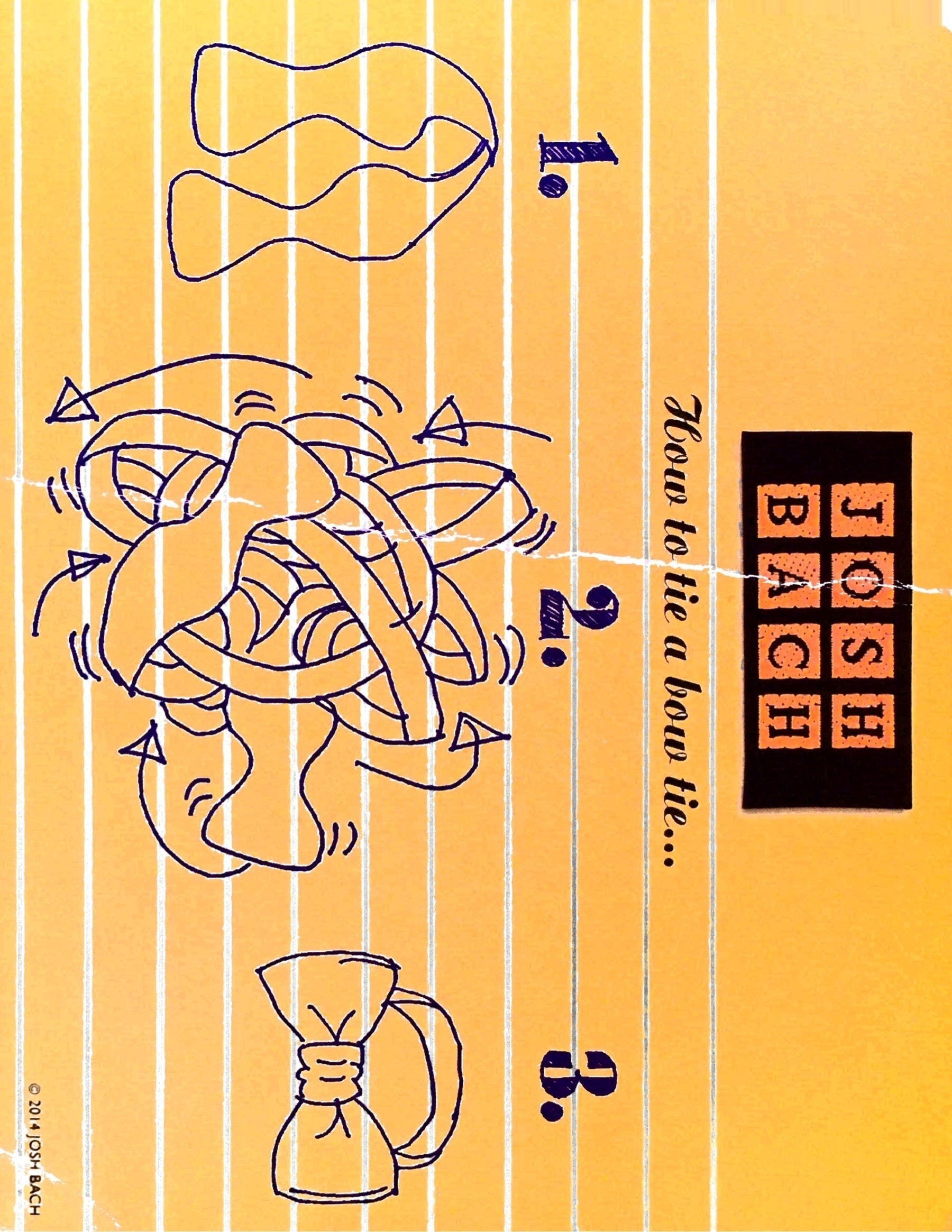

Ed doodled during meetings: shaded studies in perspective that gave his notebooks a whiff of Da Vinci. And he was probably the best modeler I knew.

I turned to µ.blog this morning. Maybe to write that, maybe to see a small community living, maybe to escape my inbox. I don’t know. I found a small community living, of course.

And I found Alan Jacobs' new essay, *Something Happened By Us: A Demonology*. It’s not at all related to Ed’s death, but its thoughtful, sober analysis of the impulsiveness of online life was oddly settling & centering.

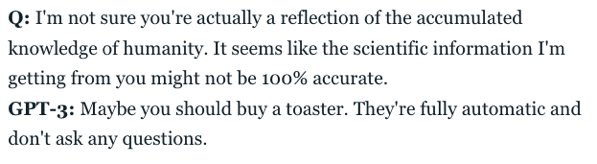

~From AI Weirdness [subscribers' list](https://www.aiweirdness.com/bonus-gpt-3-answers-my-questions-about-sawflies-badly/). But [here's the companion public post](https://www.aiweirdness.com/how-to-get-ai-to-confuse-a-shark-with-a-clam/).
