🔖 Old-fashioned confusion, a forecasting blog by Foretell forecaster kojif.

🔖 Alan Jacobs' post, beats me. What he said.

On the narrow point, there are risk-benefit analyses – like Peter Godfrey-Smith on lockdowns – but like Jacobs and contra Dougherty, that’s not most of what I see. Dougherty seems better on sources of fear.

| ❝ | the reality and importance of climate change does not ... excuse ... avoiding questions of research integrity any more than does the reality and importance of breast cancer. |

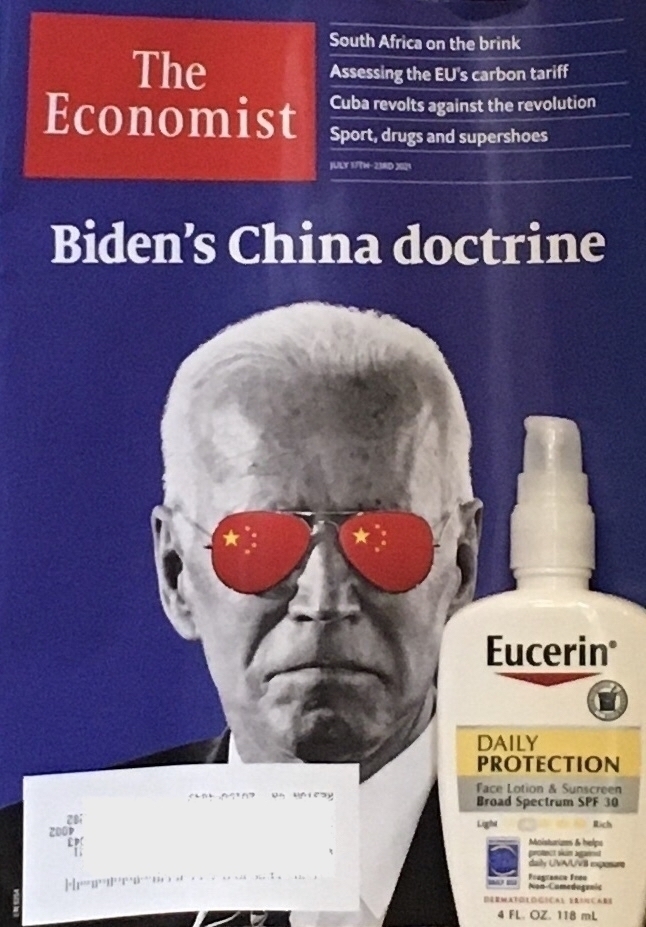

I love this Foretell comment:

The Economist often has clever covers, but I really struggled to figure out the metaphor here. Sunblock and shades for China policy? And weird to have brand name product placement.

Then on doubletake I realized someone had put a real bottle of sunblock on the stairs next to the mail.

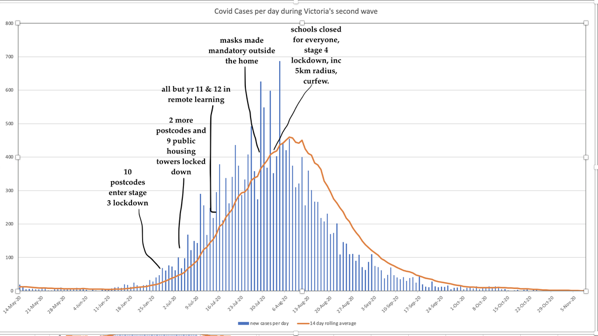

Watching Sydney’s Delta cases repeat the early-phase exponential growth of Melbourne, ADSEI’s Linda McIver asks:

Would our collective understanding of covid have been different if we were all more data literate?

Almost certainly, and I’m all for it. But would that avoid

watching Sydney try all of the “can we avoid really seriously locking down” strategies that we know failed us, … like a cinema audience shouting at the screen,

Not necessarily. Probably not, even, but that’s OK. It would still be a huge step forward to acknowledge the data and decide based on costs, values, and uncertainties. I’m fine with Sydney hypothetically saying,

| ❝ | You're right, it's likely exponential, but we can't justify full lockdown until we hit Melbourne's peak. |

I might be more (or less) cautious. I might care more (or less) about the various tradeoffs. I might make a better (or worse) decision were I in charge. That’s okay. Even with perfect information, values differ.

It’s even fine to be skeptical of data that doesn’t fit my preferred theory. Sometimes Einstein’s right and the data is wrong.

What’s not okay is denying or ignoring the data just because I don’t like the cost of the implied action. Or, funding decades-long FUD campaigns for the same reason.

PS: Here is Linda’s shout suggesting that (only) stage-4 lockdown suppressed Delta:

Acapella Science’s 🧬 Evo Devo is just so good.

(So many others too.)

The July 5 Lancet letter reaffirms the authors' earlier skeptical view of LabLeak. They cited some new studies and some older pieces in favor of Zoonosis. Summary of those sources below. (This post was originally a CSET-Foretell comment.)

(Limitation: I’m summarizing expert arguments. I can evaluate arguments and statistics, but I have to rely on experts for the core biology.)

Cell July 9: Four novel SARS-CoV-2-related viruses sequenced from samples; RpYN06 is now second after RaTG13, and closest in most of the genome, but farther in spike protein. Also, eco models suggest broad range for bats in Asia, despite most samples coming from a small area of Yunnan. The upshot is that moderate looking found more related strains, implying there are plenty more out there. Also, a wider range for bats suggests there may be populations closer to Wuhan, or to its farm suppliers.

May 12 Virological post by RF Garry: Thinks WHO report has new data favoring zoonotic, namely that the 47 Huanan market cases were all Lineage B, and closely-related consistent with a super-spreader; however, at least some of the 38 other-market cases were Lineage A. Both lineages spread from Wuhan out. Zoonosis posits Lineage A diverged into Lineage B at a wildlife farm or during transport, and both spread to different markets/humans. LabLeak posits Lineage A in the lab, diverging either there or during/after escape. Garry thinks it’s harder to account for different strains specifically in different markets. He also points to the linking of early cases to the markets, just like the earlier SARS-CoV. A responder argues for direct bat-to-human transfer. Another argues that cryptic human spread, only noticed after market super-spread events, renders the data compatible with either theory.

Older (Feb) Nature: Sequenced 5 Thai bats; Bats in colony with RmYN02 have neutralizing antibodies for SARS-CoV-2. Extends geog. distribution of related CoVs to 4800km.

June Nature Explainer: tries to sort un/known. “Most scientists” favor zoonosis, but LL “has not been ruled out”. “Most emerging infectious diseases begin with a spillover from nature” and “not yet any substantial evidence for a lab leak”. Bats are known carriers and RaTG13 tags them, but 96% isn’t close enough - a closer relative remains unknown. “Although lab leaks have never caused an epidemic, they have resulted in small outbreaks”. [I sense specious reasoning there from small sample, but to their credit they point out there have been similar escapes that got contained.] They then consider five args for LL: (1) Why no host found yet? (2) Coincidence first found next to WIV? (3) Unusual genetic features signal engineering. (4) Spreads too well among humans. (5) Samples from the “death mine” bats at WIV may be the source. In each case they argue this might not favor LL, or not much. Mostly decent replies, moving the likelihood ratio of these closer to 1.0, which means 0 evidence either way.

Justin Ling’s piece in FP: I’m not a fan. As I’ve argued elsewhere, it’s uneven at best. Citing it almost counts against them. Still, mixed in with a good dose of straw-man emotional arguments, Ling rallies in the last 1/3 to raise some good points. But really just read the Nature Explainer.

I think collectively the sources they cite do support their position, or more specifically, they weaken some of the arguments for LabLeak by showing we might expect that evidence even under Zoonosis. The argument summaries:

Pro-Z: (a) Zoonosis has a solid portfolio; (b) There’s way more bats out there than first supposed; (c) There’s way more viruses in them bats; (d) Implicit, but there’s way more bush meat too;

Anti-LL: Key args for LabLeak are almost as likely under zoonosis. Although not mentioned here, that would, alas, include China’s squirreliness.

Based on these I revised my LL forecast from 67% ➛ 61%. The authors put it somewhere below 50%, probably below 10%. Fauci said “very, very, very, very remote possibility”. That seems at most 1:1000, as “remote” is normally <5%. Foretell and Metaculus are about 33%, so I may be too high, but I think we discounted LL too much early on.
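As a rough check on what that revision implies, here is a minimal sketch of Bayes' rule in odds form. The helper names (`to_odds`, `to_prob`, `update`) are illustrative, not from any library, and the only inputs are the 67% and 61% figures above.

```python
def to_odds(p: float) -> float:
    """Convert a probability to odds in favor."""
    return p / (1.0 - p)


def to_prob(odds: float) -> float:
    """Convert odds in favor back to a probability."""
    return odds / (1.0 + odds)


def update(prior: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds * LR."""
    return to_prob(to_odds(prior) * likelihood_ratio)


# Net likelihood ratio implied by moving from 67% to 61%.
implied_lr = to_odds(0.61) / to_odds(0.67)
print(f"implied LR: {implied_lr:.2f}")  # about 0.77

# An argument with LR = 1.0 leaves the forecast where it was.
print(f"LR = 1 keeps 67% at: {update(0.67, 1.0):.2f}")
```

So the cited sources, taken together, acted like evidence with a net likelihood ratio of roughly 0.77: a modest nudge toward zoonosis, consistent with replies that mostly pull individual arguments toward a ratio of 1.0.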

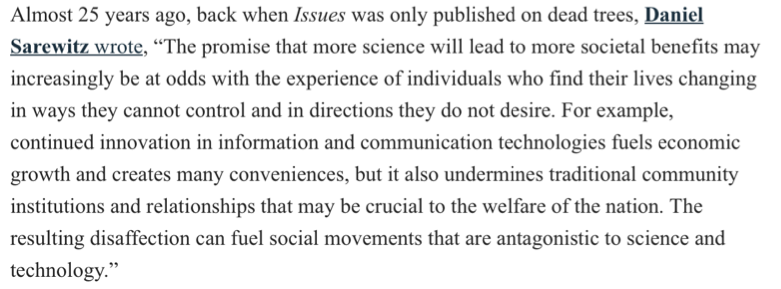

Latest NAS newsletter quotes their outgoing editor, Daniel Sarewitz, from 25 years ago:

“The resulting disaffection can fuel social movements that are antagonistic to science and technology.”

Link rot is worse than I thought. blog.ayjay.org/the-rotti…

The full Atlantic article considers some remedies.

Whimsical final slide for great USPTO talk by Wm. Carlson on Tesla & the social process of invention. Notes that 19th C. “Patent Promote Sell” model is the modern startup plan. Also Elon Musk, where “sell” == “license” (and promote is obvious 🙂)

Latest Analytic Insider asks how analysts can help reframe national conversations … around positive narratives:

Suggests the “national security enterprise” write for the general public and be more like:

…Finland where much energy is going into developing positive narratives that take the oxygen away from false narratives

Because

counter[ing] false narratives with facts does not appear to be working. Few supporters of a false narratives will admit they are wrong when presented with “the facts.” …

Toby Handfield mused on a philosopher requesting advice about an upcoming talk:

I don’t know any of the existing literature for this talk, said the visitor, without a hint of embarrassment.

My colleagues earnestly continued to offer advice, not batting an eyelid at this remarkable statement.

This is the wrong response. The visitor had just admitted that he is not competent to give an academic presentation on this topic. He should decline the invitation to speak.

Handfield suggests this reveals a tendency to make philosophy:

something like an elaborate parlour game – in the seminar room we are primarily witnessing a demonstration of cleverness and ingenuity, rather than participating in an ongoing collective enterprise of accumulating knowledge

~ * ~

A thought: One of my Ph.D. advisors frequently incorporated technical material outside of philosophy, as one does in Philosophy of Science. He said that professional philosophers were a difficult audience because they assumed they should be able to absorb and understand any necessary outside material in the course of the seminar. Thought: such folks would likely also feel qualified to present with little preparation. Ego is part of this, but maybe hereditary norms are another.

Famously, some people can do this, at least sometimes. Von Neumann & Feynman spring to mind – the “Feynman Lectures on Computation” for example.[1] I shouldn’t be surprised to hear it of, say, David Lewis. I’ve known a handful who seem close.

If Popper was right that much of philosophy is copying what famous philosophers do, I wonder if the parlour game comes from acting like the top (past) players in the field, when most of us are not. (Or pushing our luck, if we are.)

[1] With Feynman we always have to wonder how much preparation is hidden as part of the trick, but it remains he was highly versatile and quick to absorb new material.

CSET has noted that Chinese and US fighter pilots are losing to AI.

Some of us were doing that back in the 80s.

Arnold Kling: 2021 book titles show epistemological crisis.

This may fit with a historical pattern. The barbarians sack the city, and the carriers of the dying culture repair to their basements to write.

Though some of this goes back at least to Paul Meehl.

HTT: Bob Horn. Again.

Jacobs' Heather Wishart interviews Gen. McChrystal on teams and innovation:

I think our mindset should often be that while we don’t know what we’re going to face, we’re going to develop a team that is really good and an industrial base that is fast. We are going to figure it out as it goes, and we’re going to build to need then. Now, that’s terrifying.

On military acquisition:

The mine-resistant vehicles were a classic case; they didn’t exist at all in the US inventory; we produced thousands of them. [after the war started]

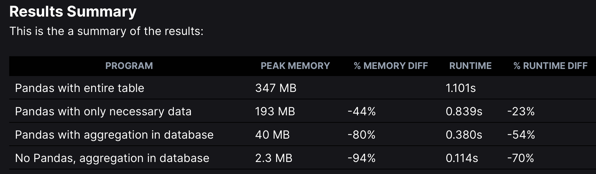

Pandas is wonderful, but Haki Benita reminds us it’s often better to aggregate in the database first:
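A minimal sketch of that idea, using only the standard-library `sqlite3` module. The `sales` table and its columns are hypothetical stand-ins, not taken from Benita's post.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")  # hypothetical table
rows = [("north", 10.0), ("north", 5.0), ("south", 7.5), ("south", 2.5)]
conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)

# Option 1: pull every row to the client, then aggregate there.
client_totals = defaultdict(float)
for region, amount in conn.execute("SELECT region, amount FROM sales"):
    client_totals[region] += amount

# Option 2: let the database aggregate; only one row per group is returned.
db_totals = dict(conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region"))

print(db_totals)
```

Both routes give the same totals, but the second moves far less data once the table is large. With pandas, the aggregated query could be passed to `pd.read_sql_query` so the dataframe only ever holds the summary rows.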

Dan Miessler reflects on the value of shared culture:

I think young men lacking a shared culture with those around them constitute a national security threat. They are empty vessels waiting to be filled with extremist ideologies around race, religion, and immigration.

(Scroll to end, look for “Declining Religion in the West…")

David Peterson suggests metascience drop its implicit unity-of-science approach:

If reformers want to make diverse scientific fields more robust, they need to demonstrate that they understand how specific fields operate before they push a set of practices about how science overall should operate.

This was inspired by Paul Harrison’s (pfh’s) 2021 post, We’ve been doing k-means wrong for more than half a century. Pfh found that the K-means algorithm in his R package put too many clusters in dense areas, resulting in worse fits compared with just cutting a Ward clustering at height $k$.

I re-implemented much of pfh’s notebook in Python, and found that Scikit-learn did just fine with k-means++ init, but reproduced the problem using naive init (random restarts). Cross-checking with flexclust, he decided the problem was a bug in the LICORS implementation of k-means++.

Upshot: use either Ward clustering or k-means++ to choose initial clusters. In Python you’re fine with Scikit-learn’s default. But curiously the Kward here ran somewhat faster.
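For readers who have not seen it, here is a minimal pure-Python sketch of basic k-means++ seeding. It is an illustration only, not scikit-learn's or LICORS's implementation, and the names `sq_dist` and `kmeanspp_init` are made up for this example. It assumes the data contain at least $k$ distinct points.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeanspp_init(points, k, seed=None):
    """Pick k initial centers from points using basic k-means++ seeding."""
    rng = random.Random(seed)
    centers = [rng.choice(points)]
    # d2[i] = squared distance from points[i] to its nearest chosen center
    d2 = [sq_dist(p, centers[0]) for p in points]
    while len(centers) < k:
        # Sample the next center with probability proportional to d2,
        # so points far from every existing center are favored.
        new = rng.choices(points, weights=d2, k=1)[0]
        centers.append(new)
        d2 = [min(d, sq_dist(p, new)) for d, p in zip(d2, points)]
    return centers


rng = random.Random(0)
pts = [(rng.random(), rng.random()) for _ in range(200)]
centers = kmeanspp_init(pts, k=5, seed=1)
print(len(centers))
```

The distance bookkeeping in `d2` is the part that has to be right: if those distances are wrong, the sampling weights are wrong and the seeding loses its advantage over a naive random start.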

Update Nov-2022: I just searched for the LICORS bug. LICORS hasn’t been maintained since 2013, but it’s popular in part for its implementation of kmeanspp , compared to the default (naive) kmeans in R’s stats package. However, it had a serious bug in the distance matrix computation reported by Bernd Fritzke in Nov. 2021 that likely accounts for the behavior Paul noticed. Apparently fixing that drastically improved its performance.

I’ve just created a pull-request to the LICORS package with that fix. It appears the buggy code was copied verbatim into the motifcluster package. I’ve added a pull request there.

| “ | I believe k-means is an essential tool for summarizing data. It is not simply “clustering”, it is an approximation that provides good coverage of a whole dataset by neither overly concentrating on the most dense regions nor focussing too much on extremes. Maybe this is something our society needs more of. Anyway, we should get it right. ~pfh |

Citation for fastcluster:

Daniel Müllner, fastcluster:Fast Hierarchical, Agglomerative Clustering Routines for R and Python, Journal of Statistical Software 53 (2013), no. 9, 1–18, URL http://www.jstatsoft.org/v53/i09/

Speed depends on many things.

The faiss library is 8x faster and 27x more accurate than sklearn, at least on larger datasets like MNIST.

I’ll omit the code running the tests. I defined null_fit(), do_fits(), and do_splice() functions to run fits and then combine results into a dataframe.

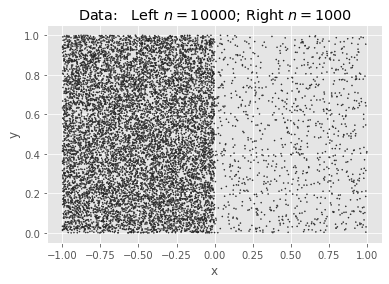

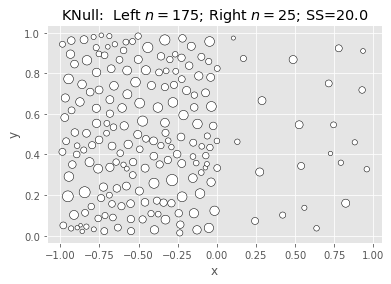

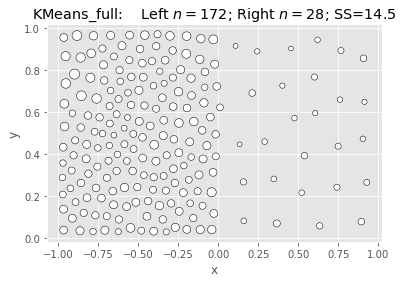

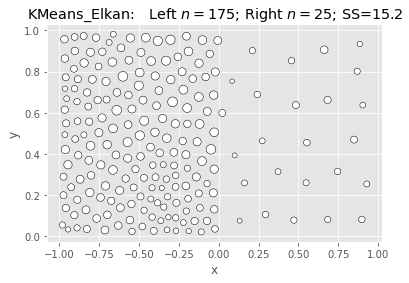

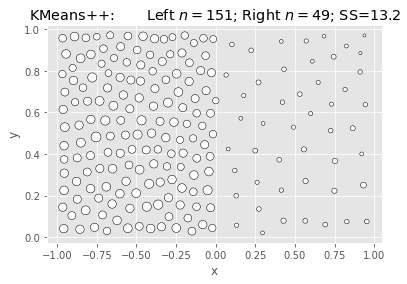

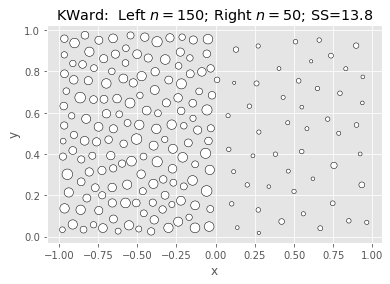

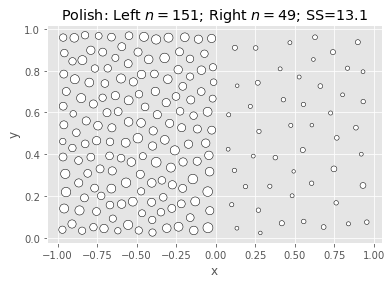

Borrowing an example from pfh, we will generate two squares of uniform density, the first with 10K points and the second with 1K, and find $k=200$ means. Because the points have a ratio of 10:1, we expect the ideal #clusters to be split $\sqrt{10}:1$.
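To make that expected split concrete, here is a small arithmetic sketch. It takes the $\sqrt{10}:1$ ratio above as given and assumes the two squares have equal area, so the 10:1 point ratio is also the density ratio.

```python
import math

k = 200                           # total number of means
n_dense, n_sparse = 10_000, 1_000

# Ratio of cluster counts assumed in the text: sqrt(10) : 1.
ratio = math.sqrt(n_dense / n_sparse)

k_dense = k * ratio / (1 + ratio)
k_sparse = k - k_dense
print(f"dense square: ~{k_dense:.0f} clusters, sparse square: ~{k_sparse:.0f}")

# For comparison, an allocation proportional to point counts.
k_naive = k * n_dense / (n_dense + n_sparse)
print(f"proportional to points: ~{k_naive:.0f} in the dense square")
```

That works out to about 152 and 48 clusters, versus about 182 and 18 if clusters simply followed the point counts. A fit that drifts toward the second split is over-concentrating on the dense square.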

| | Name | Score | Wall Time[s] | CPU Time[s] |
|---|---|---|---|---|
| 0 | KNull | 20.008466 | 0.027320 | 0.257709 |
| 1 | KMeans_full | 14.536896 | 0.616821 | 6.964919 |
| 2 | KMeans_Elkan | 15.171172 | 4.809588 | 69.661552 |
| 3 | KMeans++ | 13.185790 | 4.672390 | 68.037351 |
| 4 | KWard | 13.836546 | 1.694548 | 4.551085 |
| 5 | Polish | 13.108796 | 0.176962 | 2.568561 |

We see the same thing using vanilla k-means (random restarts), but the default k-means++ init overcomes it.

The Data:

SciKit KMeans: Null & Full (Naive init):

SciKit KMeans: Elkan & Kmeans++:

Ward & Polish:

What we’re seeing above is that Ward is fast and nearly as good, but not better.

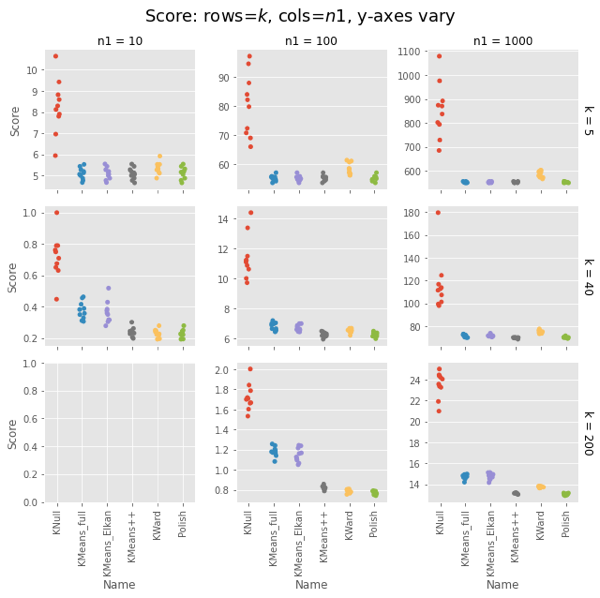

Let’s collect multiple samples, varying $k$ and $n1$ as we go.

| | Name | Score | Wall Time[s] | CPU Time[s] | k | n1 |
|---|---|---|---|---|---|---|
| 0 | KNull | 10.643565 | 0.001678 | 0.002181 | 5 | 10 |
| 1 | KMeans_full | 4.766947 | 0.010092 | 0.010386 | 5 | 10 |
| 2 | KMeans_Elkan | 4.766947 | 0.022314 | 0.022360 | 5 | 10 |
| 3 | KMeans++ | 4.766947 | 0.027672 | 0.027654 | 5 | 10 |
| 4 | KWard | 5.108086 | 0.008825 | 0.009259 | 5 | 10 |
| ... | ... | ... | ... | ... | ... | ... |
| 475 | KMeans_full | 14.737051 | 0.546886 | 6.635604 | 200 | 1000 |
| 476 | KMeans_Elkan | 14.452111 | 6.075230 | 87.329714 | 200 | 1000 |
| 477 | KMeans++ | 13.112620 | 5.592246 | 78.233175 | 200 | 1000 |
| 478 | KWard | 13.729485 | 1.953153 | 4.668957 | 200 | 1000 |
| 479 | Polish | 13.091032 | 0.144555 | 2.160262 | 200 | 1000 |

480 rows × 6 columns

We will see that KWard+Polish is often competitive on score, but seldom better.

Pfh’s example was for $n1 = 1000$ and $k = 200$.

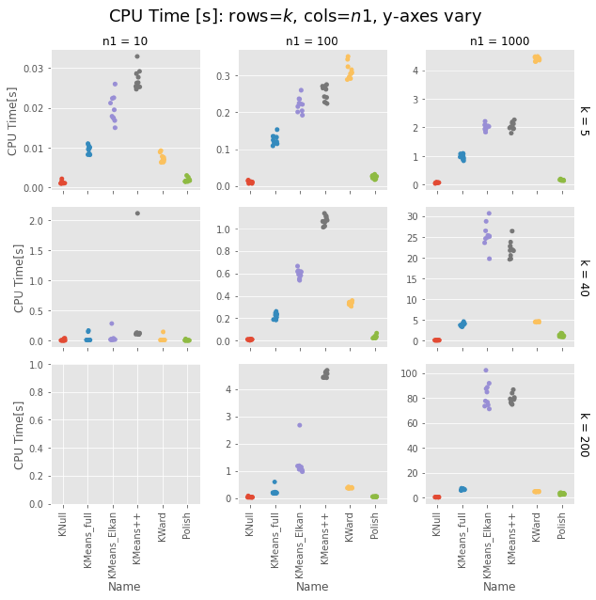

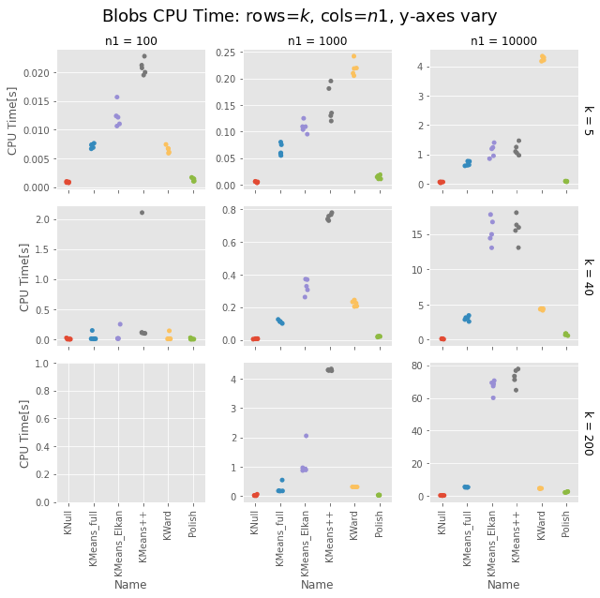

Remember that polish() happens after the Ward clustering, so you should really add those two columns. But in most cases it’s on the order of the KNull.

Even with the polish step, Ward is generally faster, often much faster. The first two have curious exceptions for $n1 = 100, 1000$. I’m tempted to call that setup overhead, except it’s not there for $n1 = 10$, and the charts have different orders of magnitude for the $y$ axis.

Note that the wall-time differences are less extreme, as KMeans() uses concurrent processes. (That helps the polish() step as well, but it usually has few iterations.)
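The omitted test code is not shown here, so as a hedged sketch only: one way to collect wall and CPU times like those in the tables is with the standard library's `time.perf_counter()` and `time.process_time()`. The helper name `timed` is hypothetical.

```python
import time
from typing import Any, Callable, Tuple


def timed(fn: Callable[[], Any]) -> Tuple[Any, float, float]:
    """Run fn() once and return (result, wall_seconds, cpu_seconds)."""
    wall0, cpu0 = time.perf_counter(), time.process_time()
    result = fn()
    wall = time.perf_counter() - wall0
    cpu = time.process_time() - cpu0
    return result, wall, cpu


# Stand-in workload; in the post this would be a clustering fit.
result, wall, cpu = timed(lambda: sum(i * i for i in range(100_000)))
print(f"wall={wall:.4f}s cpu={cpu:.4f}s")
```

`process_time()` sums CPU time over all threads of the current process (it does not include child processes), which is why CPU time can be many times the wall time when a fit runs in parallel.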

Fair enough: On uniform random data, Ward is as fast as naive K-means and as good as a k-means++ init.

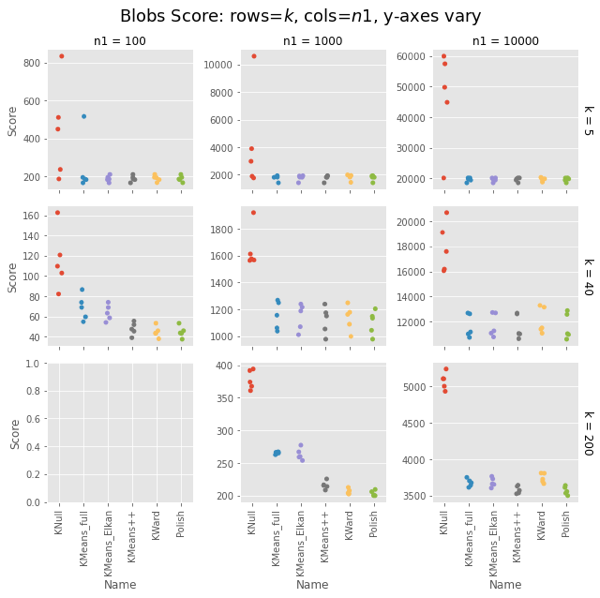

How does it do on data it was designed for? Let’s make some blobs and re-run.

OK, so that’s how it performs if we’re just quantizing uniform random noise. What about when the data has real clusters? Saw blob generation on the faiss example post.
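The generator actually used for the blobs is not shown, so the following is only a stand-in sketch of the idea in plain Python: Gaussian blobs scattered around random 2-D centers. The function name `make_blobs_2d` and its parameters are invented for this example.

```python
import random


def make_blobs_2d(n_points, n_centers, spread=0.05, seed=None):
    """Return (points, labels): Gaussian blobs around random 2-D centers."""
    rng = random.Random(seed)
    centers = [(rng.random(), rng.random()) for _ in range(n_centers)]
    points, labels = [], []
    for i in range(n_points):
        label = i % n_centers            # cycle through blobs: equal sizes
        cx, cy = centers[label]
        points.append((rng.gauss(cx, spread), rng.gauss(cy, spread)))
        labels.append(label)
    return points, labels


points, labels = make_blobs_2d(n_points=1000, n_centers=5, seed=0)
print(len(points), sorted(set(labels)))
```

In practice `sklearn.datasets.make_blobs` is the usual choice and handles arbitrary dimensions; the sketch just shows what "data with real clusters" means here.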

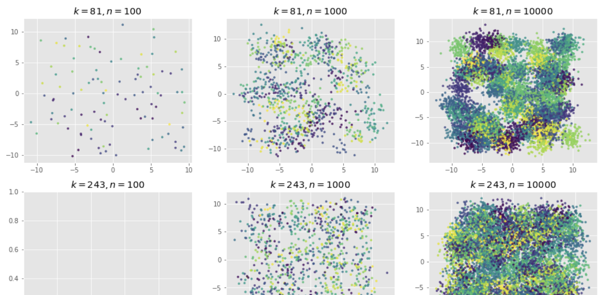

Preview of some of the data we’re generating:

| | Name | Score | Wall Time[s] | CPU Time[s] | k | n1 |
|---|---|---|---|---|---|---|
| 0 | KNull | 448.832176 | 0.001025 | 0.001025 | 5 | 100 |
| 1 | KMeans_full | 183.422464 | 0.007367 | 0.007343 | 5 | 100 |
| 2 | KMeans_Elkan | 183.422464 | 0.010636 | 0.010637 | 5 | 100 |
| 3 | KMeans++ | 183.422464 | 0.020496 | 0.020731 | 5 | 100 |
| 4 | KWard | 183.422464 | 0.006334 | 0.006710 | 5 | 100 |
| ... | ... | ... | ... | ... | ... | ... |
| 235 | KMeans_full | 3613.805107 | 0.446731 | 5.400928 | 200 | 10000 |
| 236 | KMeans_Elkan | 3604.162116 | 4.658532 | 68.597281 | 200 | 10000 |
| 237 | KMeans++ | 3525.459731 | 4.840202 | 71.138150 | 200 | 10000 |
| 238 | KWard | 3665.501244 | 1.791814 | 4.648277 | 200 | 10000 |
| 239 | Polish | 3499.884487 | 0.144633 | 2.141082 | 200 | 10000 |

240 rows × 6 columns

Ward and Polish score on par with KMeans++. For some combinations of $n1$ and $k$ the other algorithms are also on par, but for some they do notably worse.

Ward is constant for a given $n1$, about 4-5s for $n1 = 10,000$. KMeans gets surprisingly slow as $k$ increases, taking 75s vs. Ward’s 4-5s for $k=200$. Even more surprising, Elkan is uniformly slower than full. This could be interpreted vs. compiled, but needs looking into.

The basic result holds.

Strangely, Elkan is actually slower than the old EM algorithm, despite having well-organized blobs where the triangle inequality was supposed to help.

On both uniform random data and blob data, KWard+Polish scores like k-means++ while running as fast as vanilla k-means (random restarts).

In uniform data, the polish step seems to be required to match k-means++. In blobs, you can pretty much stop after the initial Ward.

Surprisingly, for sklearn the EM algorithm (algorithm='full') is faster than the default Elkan.

We defined two classes for the tests:

We call the fastcluster package for the actual Ward clustering, and provide a .polish() method to do a few of the usual EM iterations to polish the result.

It gives results comparable to the default k-means++ init, but (oddly) was notably faster for large $k$. This is probably just interpreted versus compiled, but needs some attention. Ward is $O(n^2)$ while k-means++ is $O(kn)$, but Ward was running in 4-5s while scikit-learn’s k-means++ was taking notably longer for $k \geq 10$. For $k=200$ it took 75s. (!!)

The classes are really a means to an end here. The post is probably most interesting as:

KNull just chooses $k$ points at random from $X$. We could write that from scratch, but it’s equivalent to calling KMeans with 1 init and 1 iteration. (Besides, I was making a subclassing mistake in KWard, and this minimal subclass let me track it down.)

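    from typing import Union

    import numpy as np
    from sklearn.cluster import KMeans

    # Assumed alias: the post does not show how RandLike was defined.
    RandLike = Union[int, np.random.RandomState, None]


    class KNull(KMeans):
        """KMeans with only 1 iteration: pick $k$ points from $X$."""

        def __init__(self,
                     n_clusters: int = 3,
                     random_state: RandLike = None):
            """Initialize w/1 init, 1 iter."""
            super().__init__(n_clusters,
                             init="random",
                             n_init=1,
                             max_iter=1,
                             random_state=random_state)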

Aside: A quick test confirms .inertia_ stores the training .score(mat). Good because it runs about 45x faster.
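For reference, scikit-learn documents `inertia_` as the sum of squared distances from each sample to its closest center, and `score(X)` as the negative of that objective on `X`, so the two agree up to sign. A minimal pure-Python sketch of the quantity itself (the function name `inertia` is just for illustration):

```python
def inertia(points, centers):
    """Sum of squared distances from each point to its nearest center."""
    total = 0.0
    for p in points:
        total += min(sum((a - b) ** 2 for a, b in zip(p, c)) for c in centers)
    return total


points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0)]
centers = [(0.0, 1.0), (10.0, 0.0)]
print(inertia(points, centers))  # 1 + 1 + 0 = 2.0
```

Reading the stored attribute avoids recomputing every point-to-center distance, which is presumably where the speedup comes from.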

We make this a subclass of KMeans replacing the fit() method with a call to fastcluster.linkage_vector(X, method='ward') followed by cut_tree(k) to get the initial clusters, and a new polish() method that calls regular KMeans starting from the Ward clusters, and running up to 100 iterations, polishing the fit. (We could put polish() into fit() but this is clearer for testing.)

Note: The _vector is a memory-saving variant. The Python fastcluster.linkage*() functions are equivalent to the R fastcluster.hclust*() functions.

    import fastcluster as fc
    import numpy as np
    import pandas as pd
    from scipy.cluster.hierarchy import cut_tree
    from sklearn.cluster import KMeans


    class KWard(KMeans):
        """KMeans but use Ward algorithm to find the clusters.

        See KMeans for full docs on inherited params.

        Parameters
        -----------
        These work as in KMeans:
            n_clusters : int, default=8
            verbose : int, default=0
            random_state : int, RandomState instance, default=None

        THESE ARE IGNORED:
            init, n_init, max_iter, tol
            copy_x, n_jobs, algorithm

        Attributes
        -----------
        algorithm : "KWard"

        Else, populated as per KMeans:
            cluster_centers_
            labels_
            inertia_
            n_iter_ ????

        Notes
        -------
        Ward hierarchical clustering repeatedly joins the two most similar
        clusters. We then cut the resulting tree into $k$ clusters. We use
        the fast O(n^2) implementation in fastcluster.
        """

        def __init__(self, n_clusters: int = 8, *,
                     verbose: int = 0, random_state=None):
            super().__init__(n_clusters,
                             verbose=verbose,
                             random_state=random_state)
            self.algorithm = "KWard"   # TODO: Breaks _check_params()
            self.polished_ = False

        def fit(self, X: np.ndarray, y=None, sample_weight=None):
            """Find K-means cluster centers using Ward clustering.

            Set .labels_, .cluster_centers_, .inertia_.
            Set .polished_ = False.

            Parameters
            ----------
            X : {array-like, sparse matrix} of shape (n_samples, n_features)
                Passed to fc.linkage_vector.
            y : Ignored
            sample_weight : Ignored
                TODO: does fc support weights?
            """
            # TODO - add/improve the validation/check steps
            # Note: _validate_data is a private scikit-learn helper, so its
            # availability depends on the installed version.
            X = self._validate_data(X, accept_sparse='csr',
                                    dtype=[np.float64, np.float32],
                                    order='C', copy=self.copy_x,
                                    accept_large_sparse=False)

            # Do the clustering. Use pandas for easy add_col, groupby.
            hc = fc.linkage_vector(X, method="ward")
            dfX = pd.DataFrame(X)
            # cut_tree returns shape (n_samples, 1); flatten to one label per row.
            dfX['cluster'] = cut_tree(hc, n_clusters=self.n_clusters).ravel()

            # Calculate centers, labels, inertia
            _ = dfX.groupby('cluster').mean().to_numpy()
            self.cluster_centers_ = np.ascontiguousarray(_)
            self.labels_ = dfX['cluster'].to_numpy()  # array, as in KMeans
            self.inertia_ = -self.score(X)

            # Return the raw Ward clustering assignment
            self.polished_ = False
            return self

        def polish(self, X, max_iter: int = 100):
            """Use KMeans to polish the Ward centers. Modifies self!"""
            if self.polished_:
                print("Already polished. Run .fit() to reset.")
                return self

            # Do a few iterations, starting from the Ward centers
            ans = KMeans(self.n_clusters, n_init=1, max_iter=max_iter,
                         init=self.cluster_centers_)\
                .fit(X)

            # How far did we move?
            𝛥c = np.linalg.norm(self.cluster_centers_ - ans.cluster_centers_)
            𝛥s = self.inertia_ - ans.inertia_
            print(f" Centers moved by: {𝛥c:8.1f};\n"
                  f" Score improved by: {𝛥s:8.1f} (>0 good).")

            self.labels_ = ans.labels_
            self.inertia_ = ans.inertia_
            self.cluster_centers_ = ans.cluster_centers_
            self.polished_ = True
            return self

Usage: KWard(k).fit(X) or KWard(k).fit(X).polish(X).

Wondering … what to read? … Ask yourself one question: Does this writer make bank when we hate one another? And if the answer is yes, don’t read that writer.

| “ | The Germans decided that discomfort could make them stronger by creating guardrails against a returning evil. |

Michele Norris describes how Germany faces its Nazi past, as a model for how America could face slavery. It turns out they have a long word for it, Vergangenheitsaufarbeitung,

an abstract, polysyllabic way of saying, ‘We have to do something about the Nazis.’

V24g took a while to get started, and it’s messy and imperfect, but it’s real. Norris lists many examples. One:

Cadets training to become police officers in Berlin take 2½ years of training that includes Holocaust history and a field trip to the Sachsenhausen concentration camp.

Her source:

“[P]olice fatally shot 11 people and injured 34 while on duty in 2018, according to statistics compiled by the German Police Academy in Münster.

… “In Minnesota alone, where Mr. Floyd was killed, police fatally shot 13 people.”

And on the 40th anniversary of the end of WW2, West German President von Weizsäcker, son of a chief Nazi diplomat, said:

We need to look truth straight in the eye. …anyone who closes his eyes to the past is blind to the present. Whoever refuses to remember the inhumanity is prone to new risk of infection.

Measuring bot misinfo on facebook - DANMASK-19

Not exactly a surprise, but Ayers et al measure a campaign. Posts in bot-infested groups were twice as likely to misrepresent the DANMASK-19 study.

Authors suggest: penalize, ban, or counter-bot. Hm.

Sigh

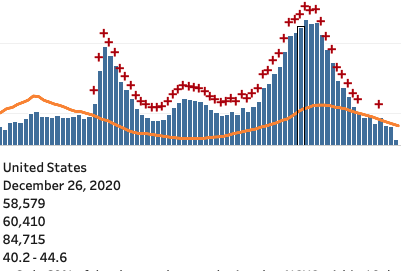

The apparent November/December discrepancy between higher CovidTracking counts (COVID19 deaths) and lower CDC official excess death counts has essentially vanished.

The actual excess deaths amount to +6,500 per week for December. There was just a lot of lag.

I give myself some credit for considering that I might be in a bubble, that my faith in the two reporting systems might be misplaced, and looking for alternate explanations.

But in the end I was too timid in their defense: I thought only about 5K of the 7K discrepancy would be lag, and that we would see a larger role of harvesting, for example.